Nvidia Q3 Earnings

Great print, Trailing indicator of AI bubble, Value capture, AI lab profitability concerns, Networking business, Meta, China & Taiwan, Google, and more

Nvidia delivered a beautiful Q3 print on Wednesday, and there are several angles worth unpacking. But first, why was there any doubt about Nvidia’s earnings anyway? Yes, the AI concerns are very legitimate, but Nvidia’s earnings will be a trailing indicator of an AI bubble. We’ll discuss.

Then we can get into the call itself, including:

Networking business

Meta

China & Taiwan

Google

AI lab profitability concerns

Here we go:

Nvidia’s Earnings

From The WSJ,

Nvidia reported record sales and strong guidance Wednesday, helping soothe jitters about an artificial intelligence bubble that have reverberated in markets for the last week.

Sales in the October quarter hit a record $57 billion as demand for the company’s advanced AI data center chips continued to surge, up 62% from the year-earlier quarter and exceeding consensus estimates from analysts polled by FactSet. The company increased its guidance for the current quarter, estimating that sales will reach $65 billion—analysts had predicted revenue of $62.1 billion for the quarter.
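For a quick sanity check on those headline numbers, here is a small back-of-the-envelope sketch in Python. It uses only the rounded figures quoted above, so the derived values (the implied year-ago quarter, the size of the guidance beat, the implied sequential growth) are approximations, not reported numbers. The variable names are mine.

```python
# Figures quoted in the WSJ excerpt above, in USD billions (rounded).
q3_revenue = 57.0      # October-quarter sales
yoy_growth = 0.62      # up 62% from the year-earlier quarter
q4_guide = 65.0        # company guidance for the current quarter
q4_consensus = 62.1    # analyst estimate for the current quarter

# Derived values: approximate, because the inputs are rounded.
implied_year_ago = q3_revenue / (1 + yoy_growth)
guide_beat = q4_guide - q4_consensus
guide_beat_pct = guide_beat / q4_consensus
implied_qoq_growth = q4_guide / q3_revenue - 1

print(f"Implied year-ago quarter: ~${implied_year_ago:.1f}B")
print(f"Guide vs. consensus: +${guide_beat:.1f}B ({guide_beat_pct:.1%})")
print(f"Implied sequential growth at the guide: {implied_qoq_growth:.1%}")
```

In other words, the guide alone implies roughly another $8 billion of quarterly revenue on top of a record quarter.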

Another incredible quarter from Nvidia and an even more impressive guide.

Of Course Nvidia Would Deliver

You didn’t expect anything less, right?

Forget the noise about stock market jitters, OpenAI profitability, or Michael Burry’s depreciation math. This quarter’s demand was locked in months ago, and Nvidia’s only job was to ship the hardware already spoken for.

After all, Nvidia’s direct and indirect customers signaled their GB300 demand quarters ago. Nothing since has altered that trajectory.

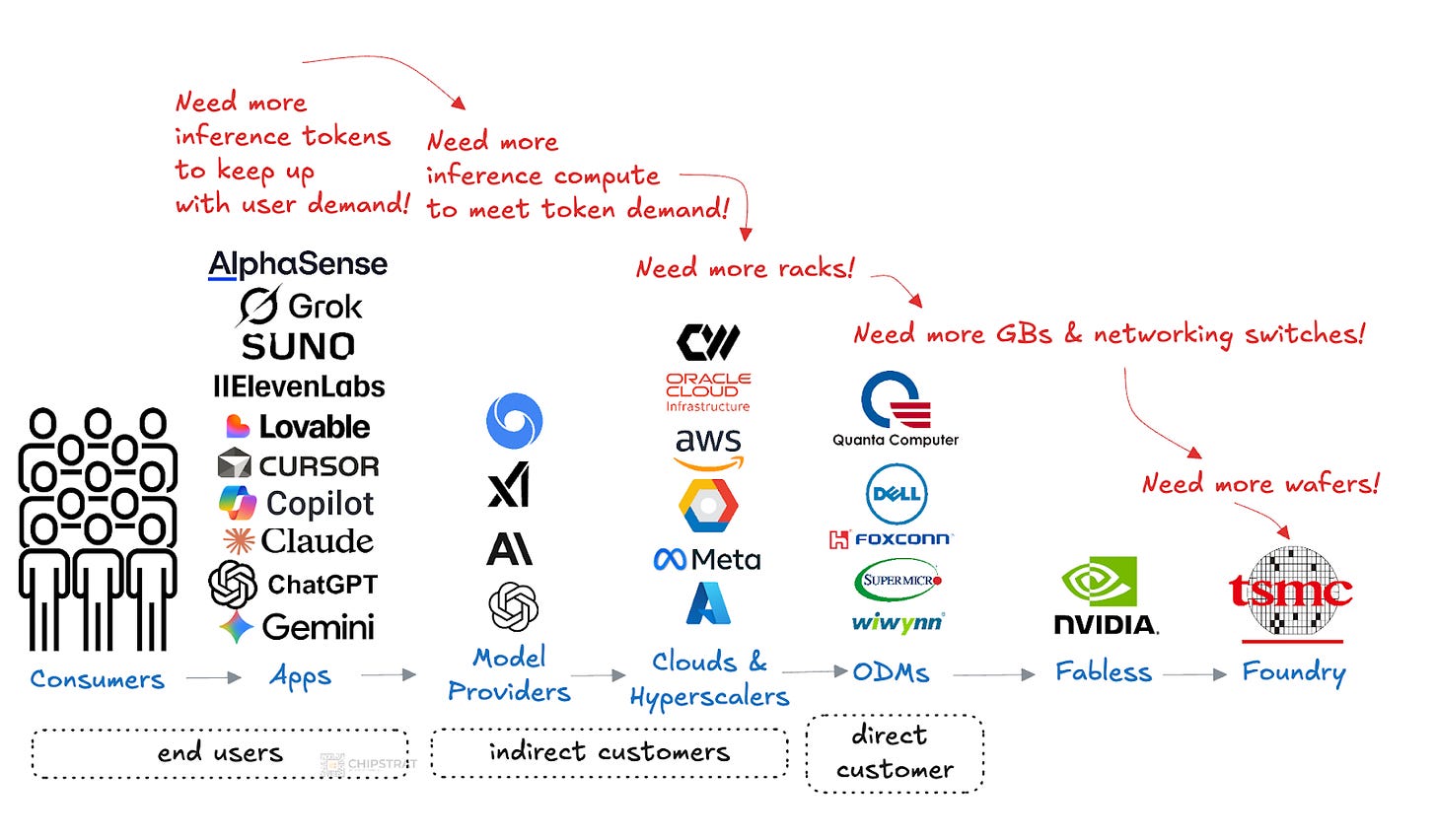

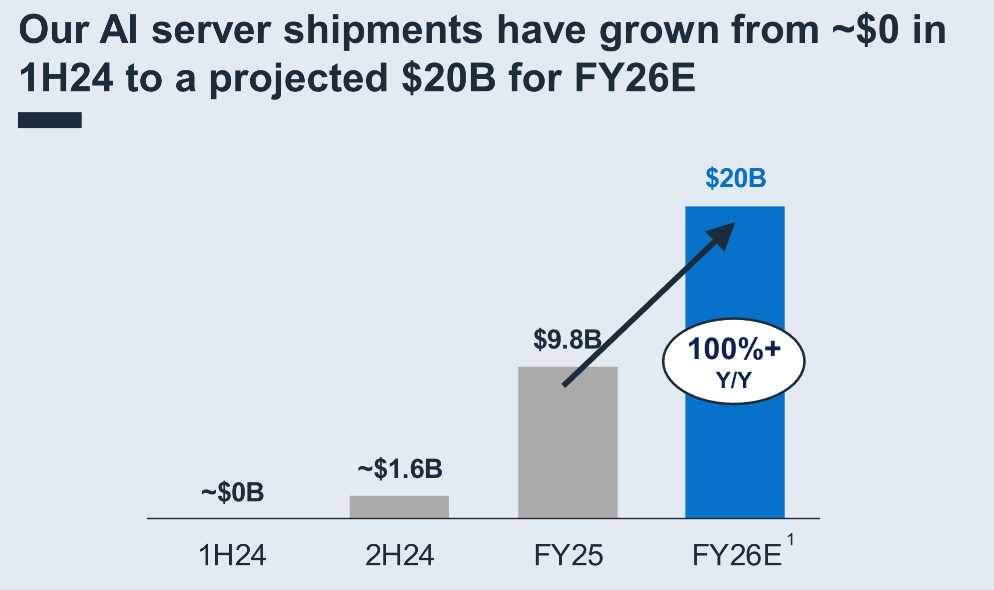

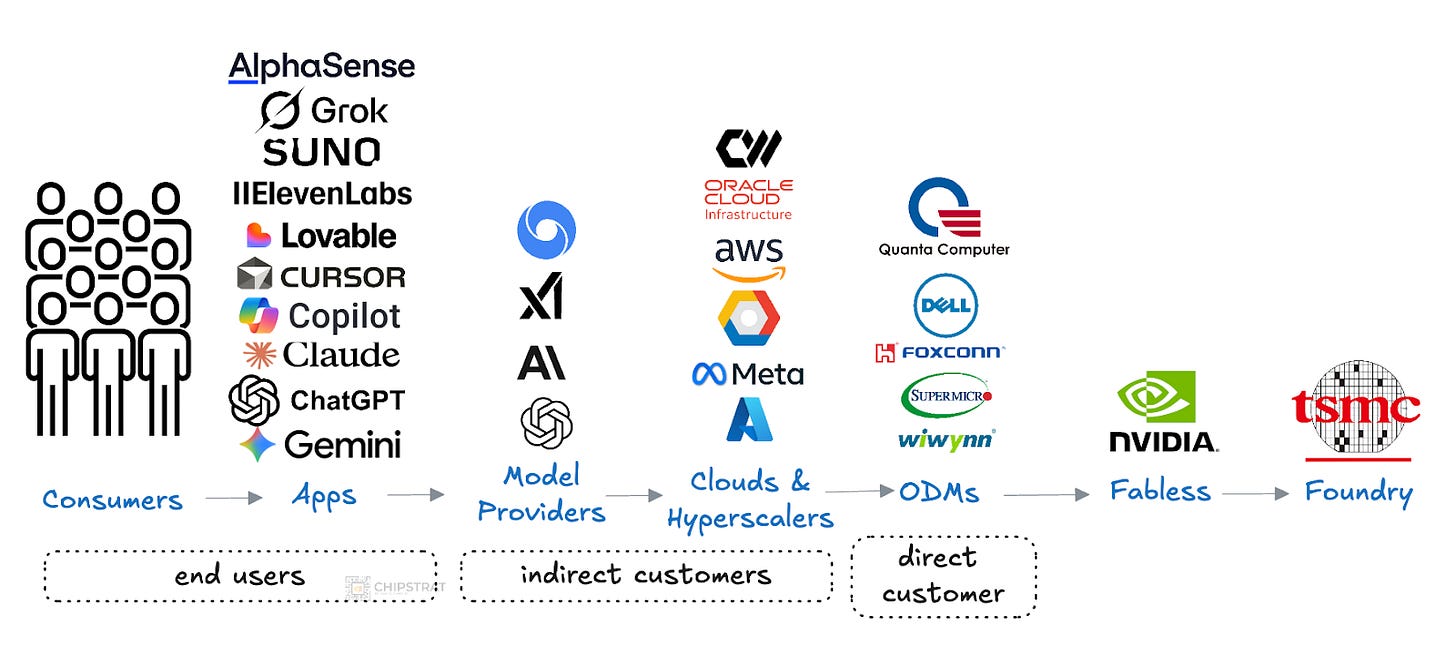

As a reminder, Nvidia mainly sells to ODMs and system integrators, who purchase the GPUs, build the racks, and deliver the AI factories to the clouds and hyperscalers:

Orders are planned well in advance of any given quarter. System builders like Dell, Supermicro, Wiwynn, Quanta, and Foxconn are buying a ton of Grace Blackwell Ultras right now because Microsoft, Amazon, Google, Meta, Oracle, and CoreWeave have already placed orders.

And we have confidence that Nvidia’s 2026 guide is legitimate because we’ve been told as much, up and down the chain:

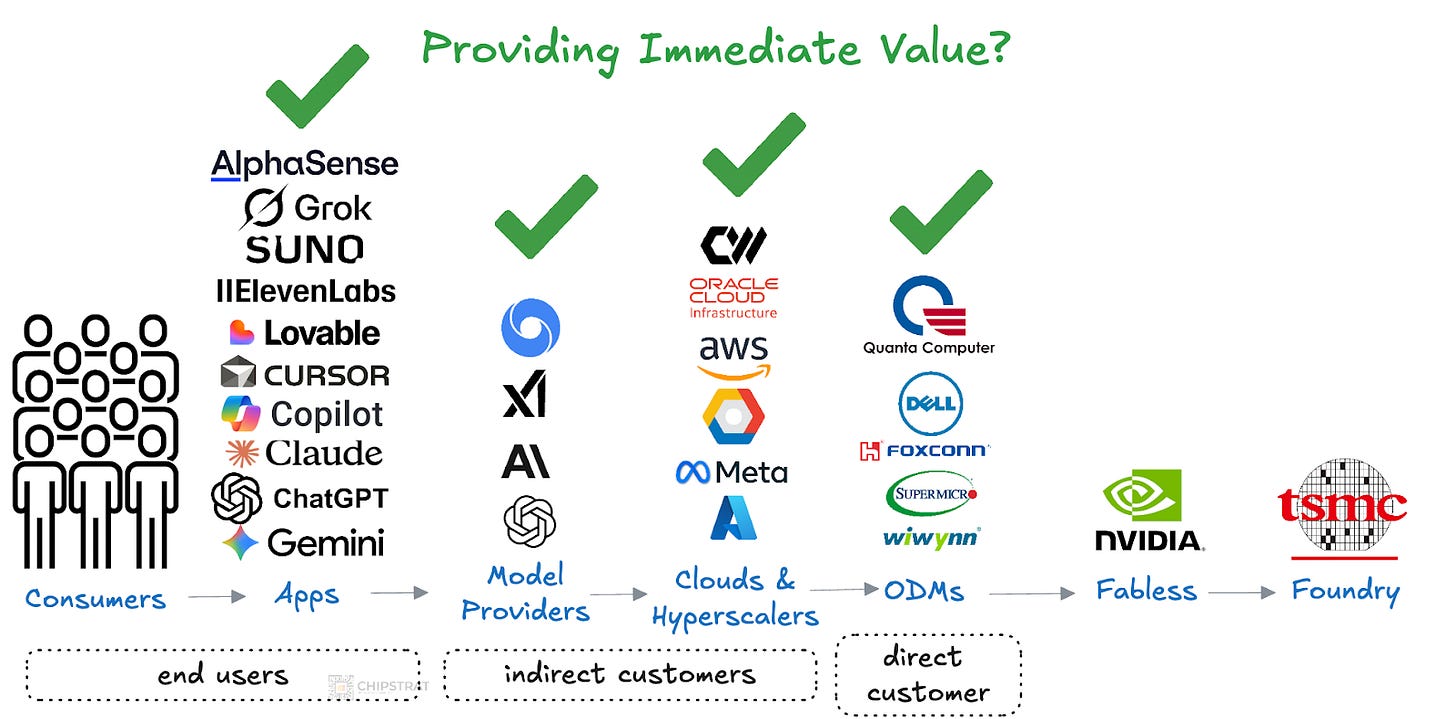

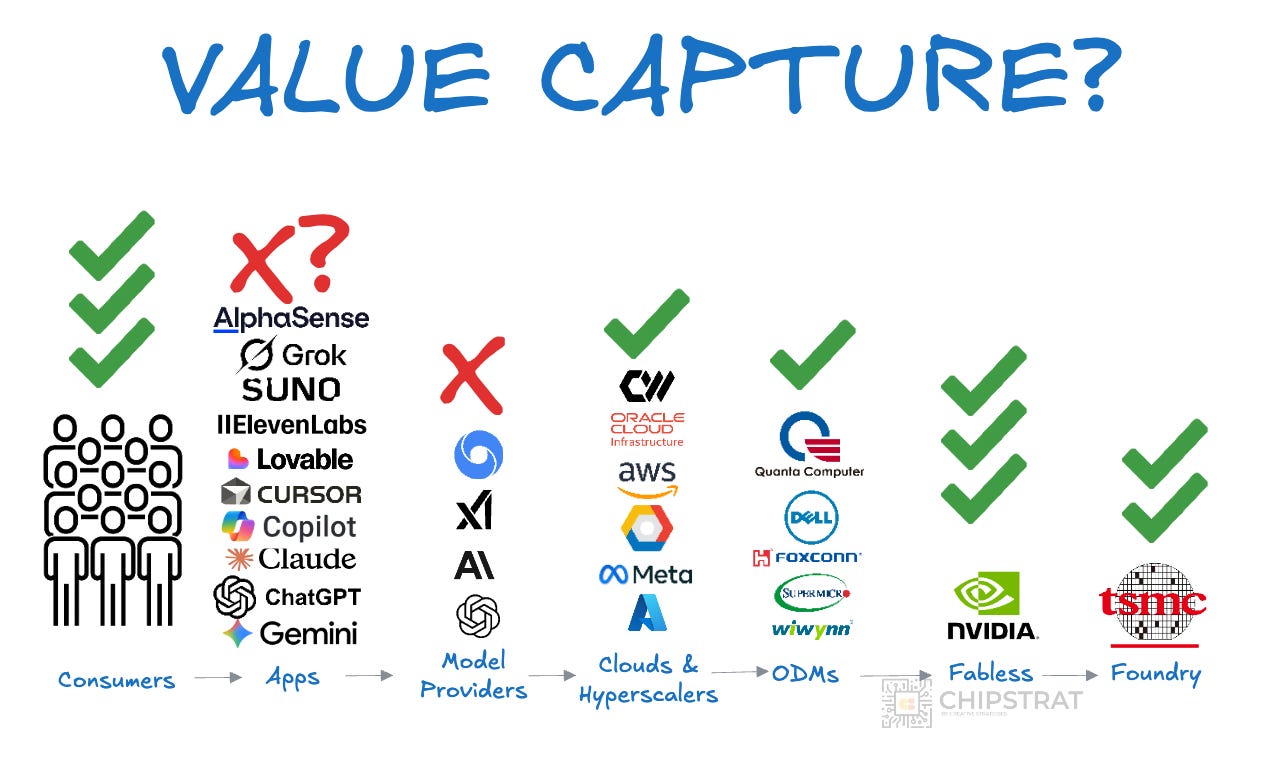

Obviously, many GenAI-powered apps are already being used seriously day to day. Again, the illustration above only scratches the surface. All those apps are saying they need more inference tokens to meet user demand. Which means clouds, neoclouds, and hyperscalers need more AI clusters. Which means ODMs need to build more racks. And ODMs need more of Nvidia’s silicon - GPUs, CPUs, and Networking switches:

Which means Nvidia needs more wafers and packaging from the TSMC ecosystem. And TSMC does not take this request lightly. As we recently discussed,

Building [foundry] capacity at the leading edge is extraordinarily capital-intensive. And overbuilding is catastrophic; once built, depreciation and maintenance keep running whether wafers are sold or not. Gotta keep the fabs full.

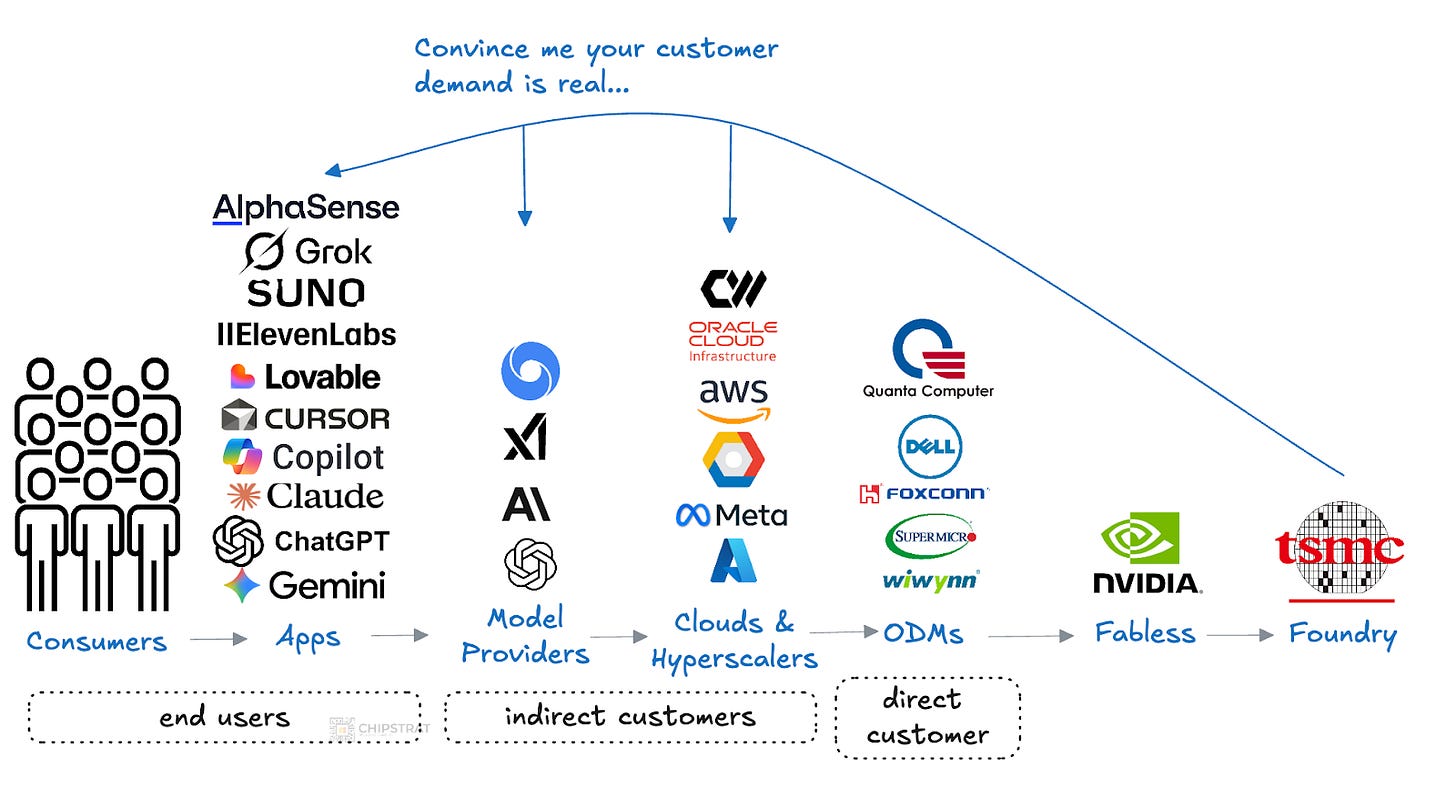

When Nvidia asks for more wafers, TSMC is now saying, “I trust you, but I need to verify.” TSMC CEO C.C. Wei said on the recent earnings call that today’s demand is “insane”, and it’s accelerating. Hence, needing to verify:

CCW: The AI demand actually continue to be very strong, it’s more -- more stronger than we thought 3 months ago, okay? So in today’s situation, we have talked to customers and then we talk to customers’ customer.

TSMC needs to be convinced that the demand is legitimate:

And what does TSMC see out there?

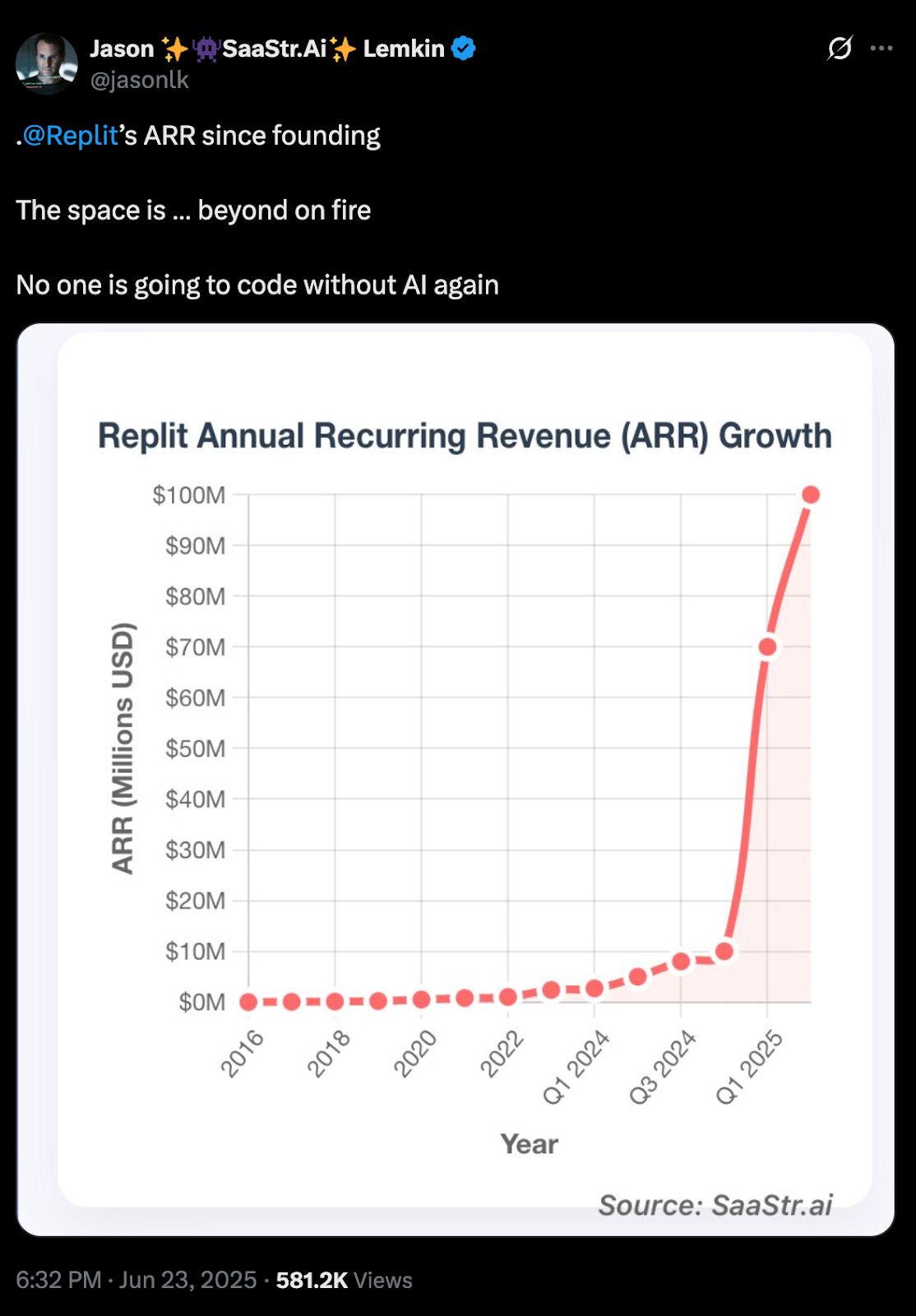

GenAI powered startups are racing to $100M ARR in record time:

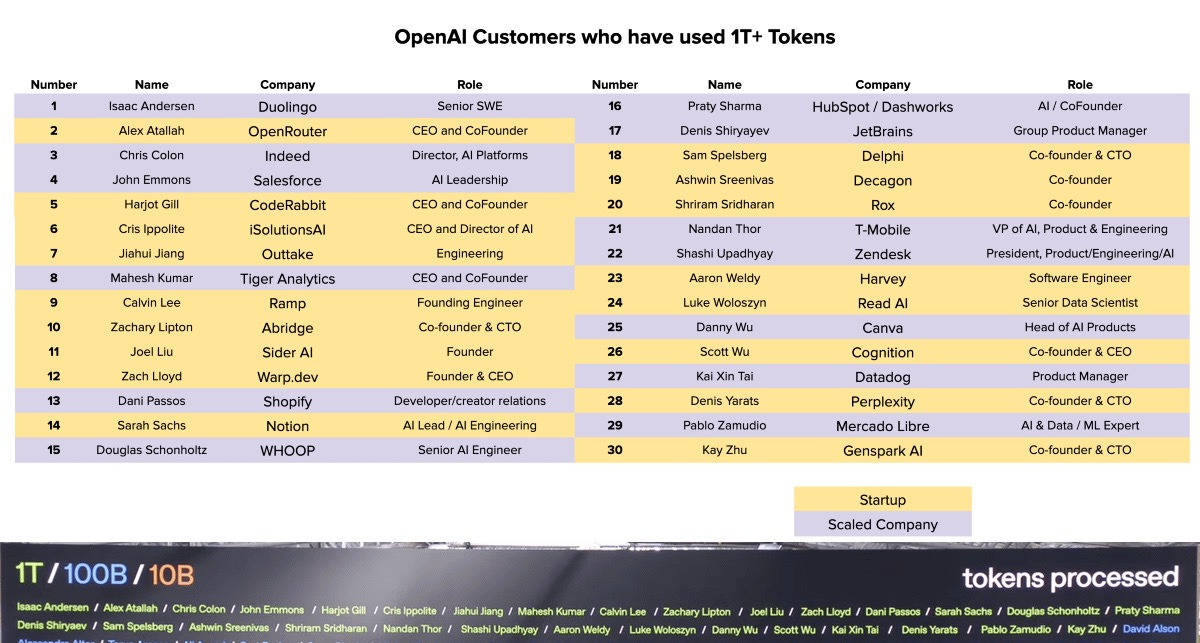

It’s not just coding apps. Companies that use OpenAI tokens heavily include Duolingo, Ramp, Shopify, WHOOP, and Canva.

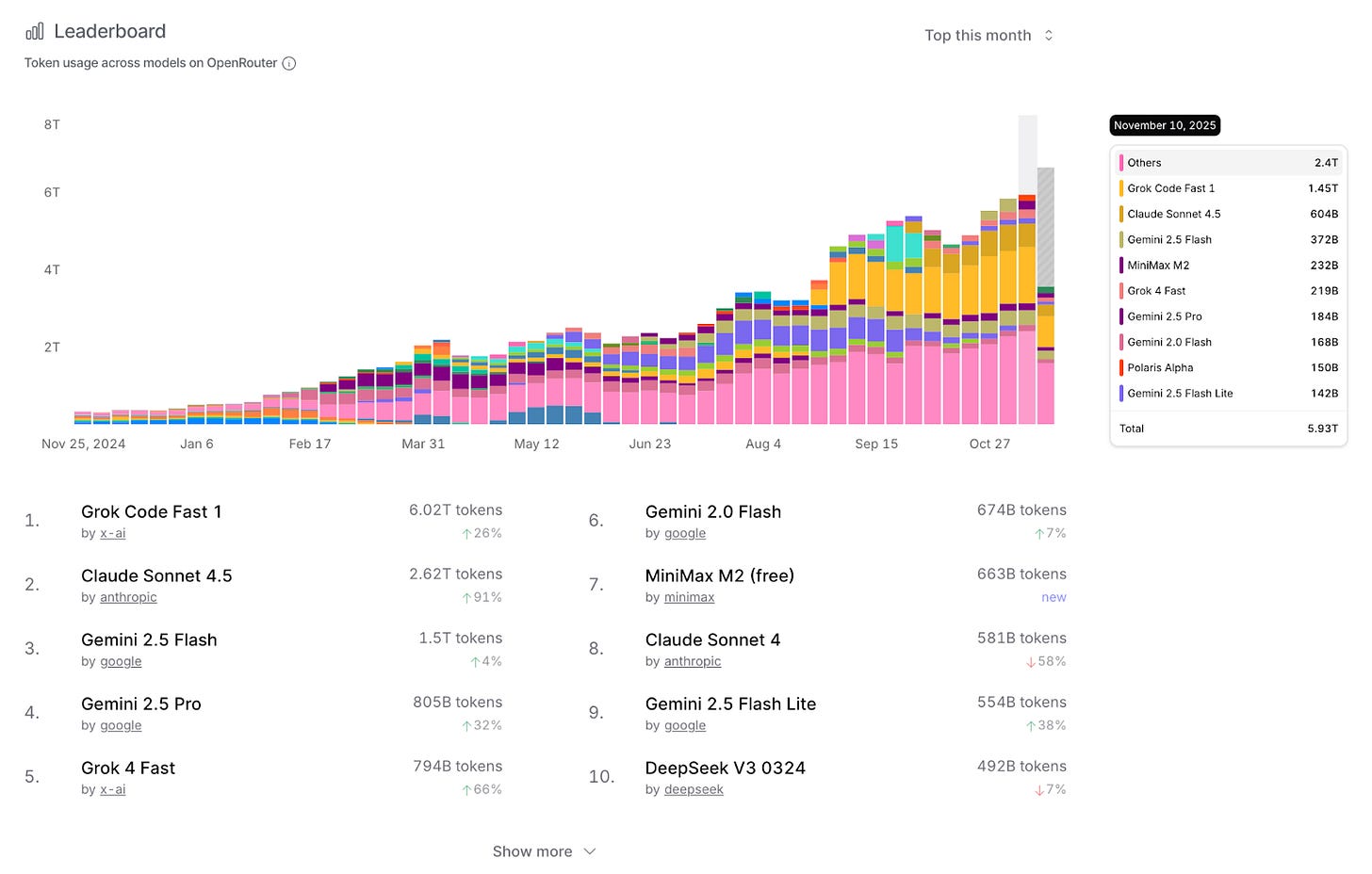

The app layer is consuming a ton of tokens, placing significant demands on model providers. It’s real. And it’s not just OpenAI. Check out the token growth across the ecosystem just this year, as captured by the middleman OpenRouter:

Tons of Grok, Claude, Gemini, MiniMax, and DeepSeek tokens.

App layer demand is real, and downstream demand at CSPs, neoclouds, and hyperscalers is real.

So TSMC believes. These are TSMC’s customers’ customers.

CC Wei: We directly received very strong signals from our customers’ customers, requesting the capacity to support their business. Thus, our conviction in the AI megatrend is strengthening and we believe the demand for semiconductor will continue to be very fundamental.

TSMC goes so far as to call it an “AI megatrend” and believes it’s fundamental and here to stay.

This is corroborated by hyperscalers' earnings calls; demand is accelerating, so 2026 CapEx will be even higher than 2025. Here’s what the CFOs are saying:

Alphabet CFO Anat Ashkenazi:

We’re continuing to invest aggressively due to the demand we’re experiencing from Cloud customers as well as the growth opportunities we see across the company.

Microsoft CFO Amy Hood:

Next, capital expenditures. With accelerating demand and a growing RPO balance, we’re increasing our spend on GPUs and CPUs. Therefore, total spend will increase sequentially, and we now expect the FY ‘26 growth rate to be higher than FY ‘25.

Amazon CFO Brian Olsavsky:

We’ll continue to make significant investments, especially in AI, as we believe it to be a massive opportunity with the potential for strong returns on invested capital over the long term…Looking ahead, we expect our full year cash CapEx to be approximately $125 billion in 2025, and we expect that amount will increase in 2026.

Meta CFO Susan Li:

We currently expect 2025 capital expenditures, including principal payments on finance leases to be in the range of $70 billion to $72 billion, increased from our prior outlook of $66 billion to $72 billion… We are still working through our capacity plans for next year, but we expect to invest aggressively to meet these needs, both by building our own infrastructure and contracting with third-party cloud providers

And of course that means ODM/OEM/system integrator demand is skyrocketing too. From Dell’s earnings call back in August:

Nvidia’s upstream and downstream supply chain told us they all need more Nvidia.

So, regardless of recent market jitters, of course Nvidia would deliver this quarter.

But What About The Concerns?

Yet, there are legitimate concerns about an AI bubble.

The concern is not that AI isn’t useful. The value consumers experience is real. And concerns about overbuilding are misguided. The whole ecosystem is ramping as fast as possible to deliver the value users are demanding.

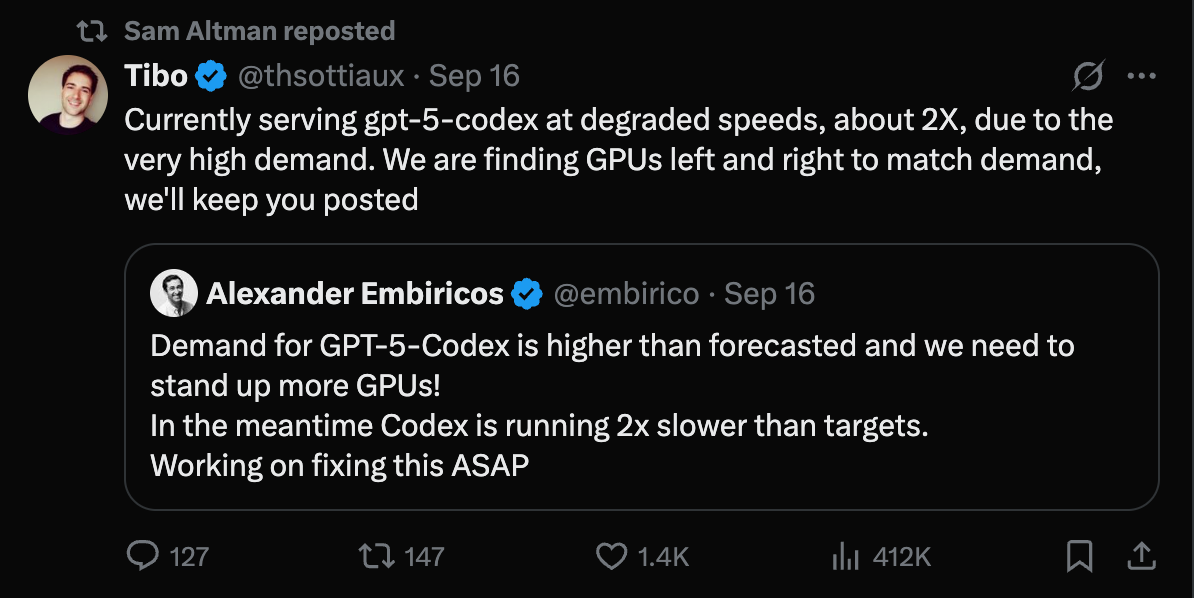

This is not stranded capacity sitting idle the way dark fiber once did. The GPUs/XPUs aren’t sitting idle. Rather we see tweets about “finding GPUs left and right to match demand”:

Yes, power is a constraint, but Jensen is helping search every nook and cranny for available power:

Jensen Huang: Our network of partners is so large that we will find nooks and crannies of power — large scale, medium scale, small scale – in different parts of the world. And so this is a huge advantage of ours.

There’s obviously value being created up and down the chain, accruing to end users, and the whole ecosystem is racing to overcome constraints. Find power, plug in racks, deploy models, serve tokens.

SO WHAT’S THE PROBLEM THEN?!

From OpenAI profitability to circular financing to depreciation math, all the concerns really get at one thing: profitability.

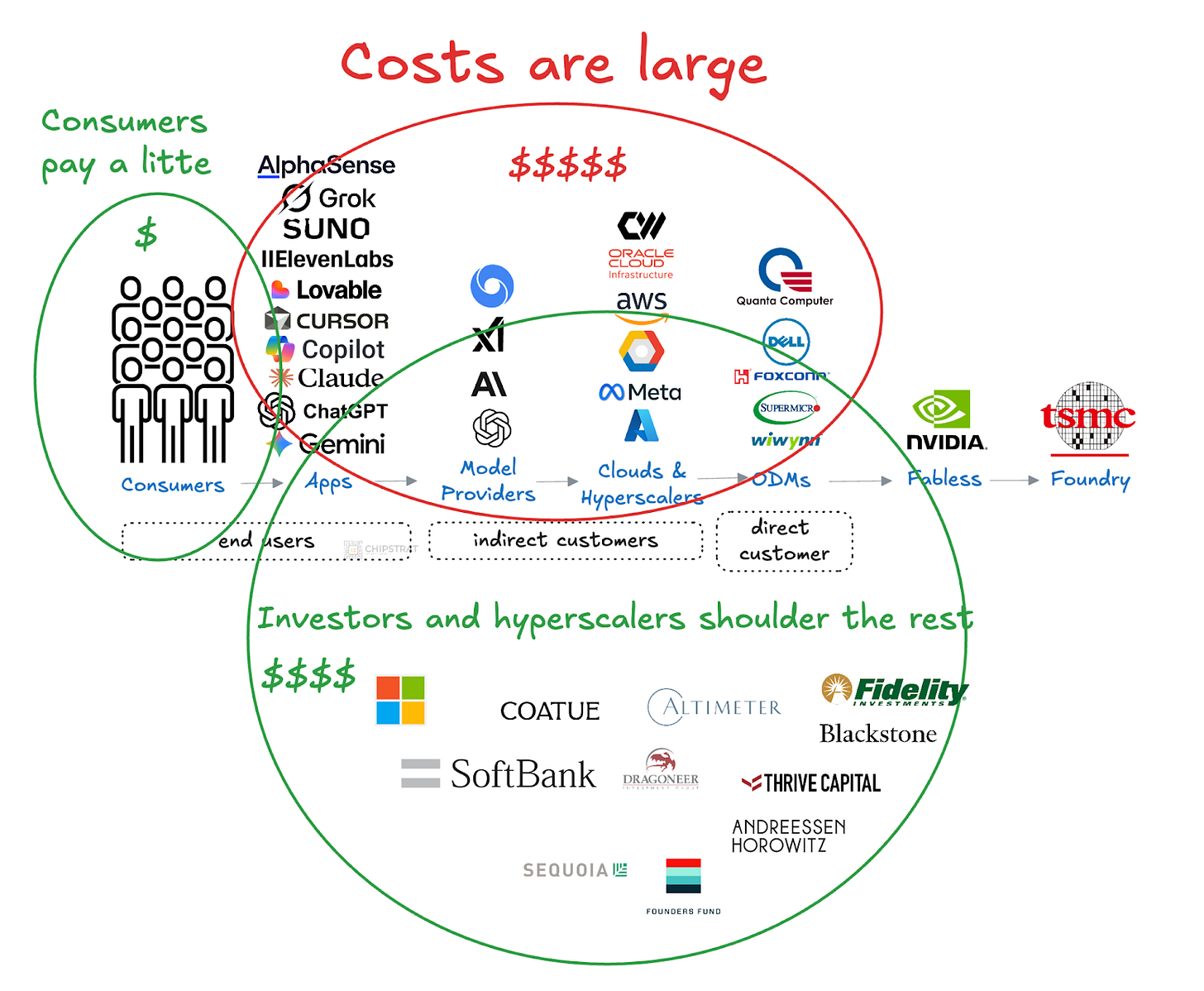

There’s obviously a surplus of consumer value at one end of the chain. But not enough value capture across the chain.

After all, the costs of this era are much higher: the cloud era was all about cheaply creating and retrieving data (social media, SaaS apps, YouTube) but now we’re talking about generating information on demand. That’s much more computationally expensive. And it increases the legacy costs (cloud storage and retrieval) too. Generating Sora videos is expensive, but then OpenAI has to store them all and serve them back up too…

Who is paying for all the machinery to work?

The brunt of the costs is not shouldered by consumers, nor by model providers. The subsidization comes from the investors funding external and internal model labs: Microsoft, Amazon, Google, Meta, venture firms, and private credit’s off-balance sheet special purpose vehicles.

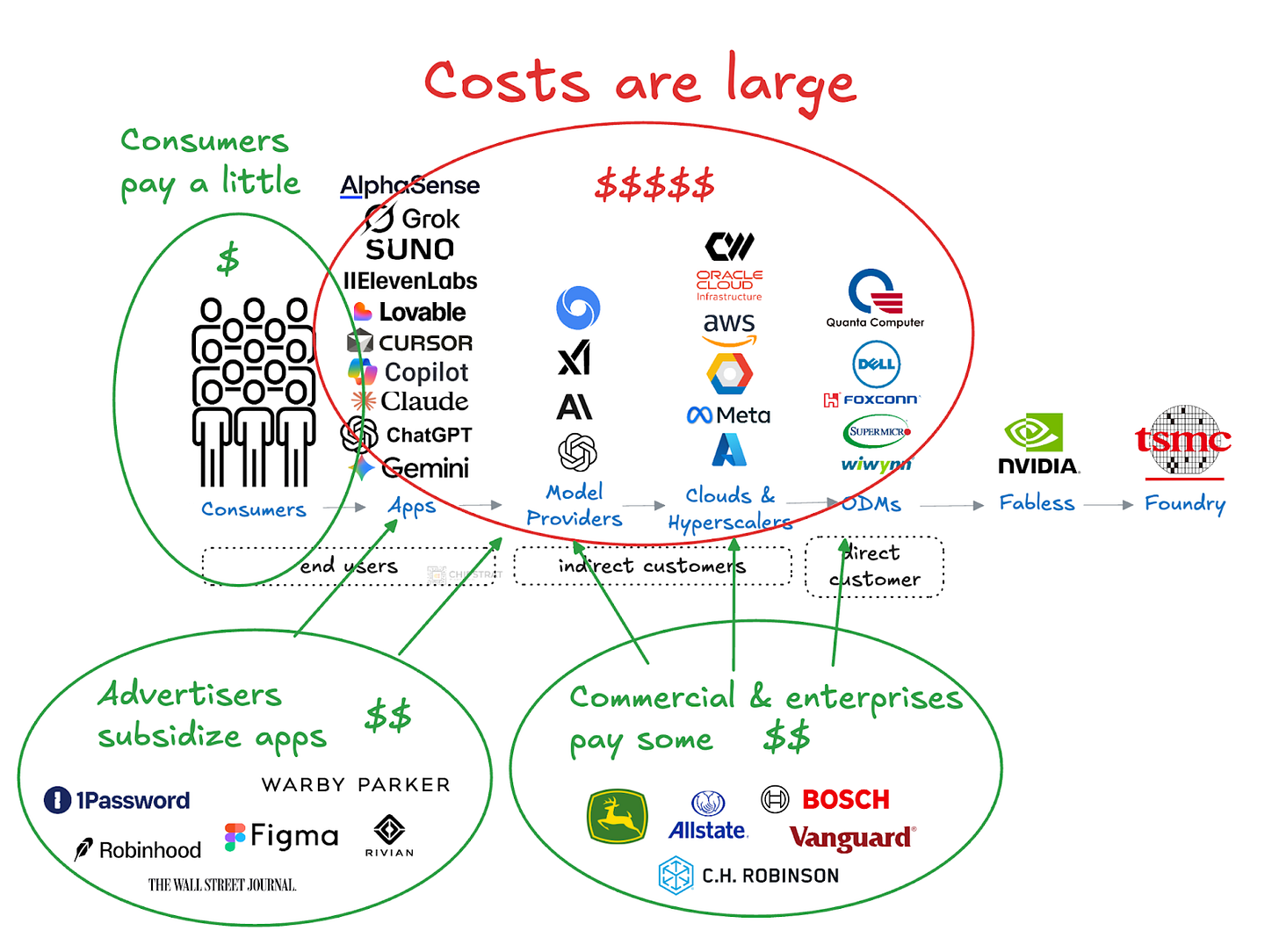

In the long run we need a model where every participant pays for value they capture such that the whole ecosystem can stay in business. That likely means consumers pay a bit via subscriptions, commercial and enterprise spend on APIs, GPU rentals, or on-prem systems, and advertisers covering the balance in return for placement inside AI apps:

The risk is a pause in infrastructure buildout before this model fully forms.

What if private AI labs can’t get more funding?

What if Meta can’t turn AI CapEx investments into new topline revenue?

What if GPU/XPU rental demand peaks, and prices start to drop?

What if OpenAI struggles to get an auction-based ad system up and running?

Each of these pressures works against profitability.

It’s not hard to imagine a downside case where investors pull back from subsidizing inference, leaving AI labs slow to raise new capital and wary of down rounds. Their token capacity growth would taper, and hyperscalers would respond by dialing back forward CapEx:

This seems fairly improbable, but then Altman is now talking to employees about economic headwinds due to increased competition from a profitable competitor. Just yesterday, The Information reported:

OpenAI CEO Sam Altman told colleagues last month that Google’s recent progress in artificial intelligence could “create some temporary economic headwinds for our company,” though he added that OpenAI would emerge ahead.

If the near-term revenue environment gets harder for OpenAI, might investors get cold feet?

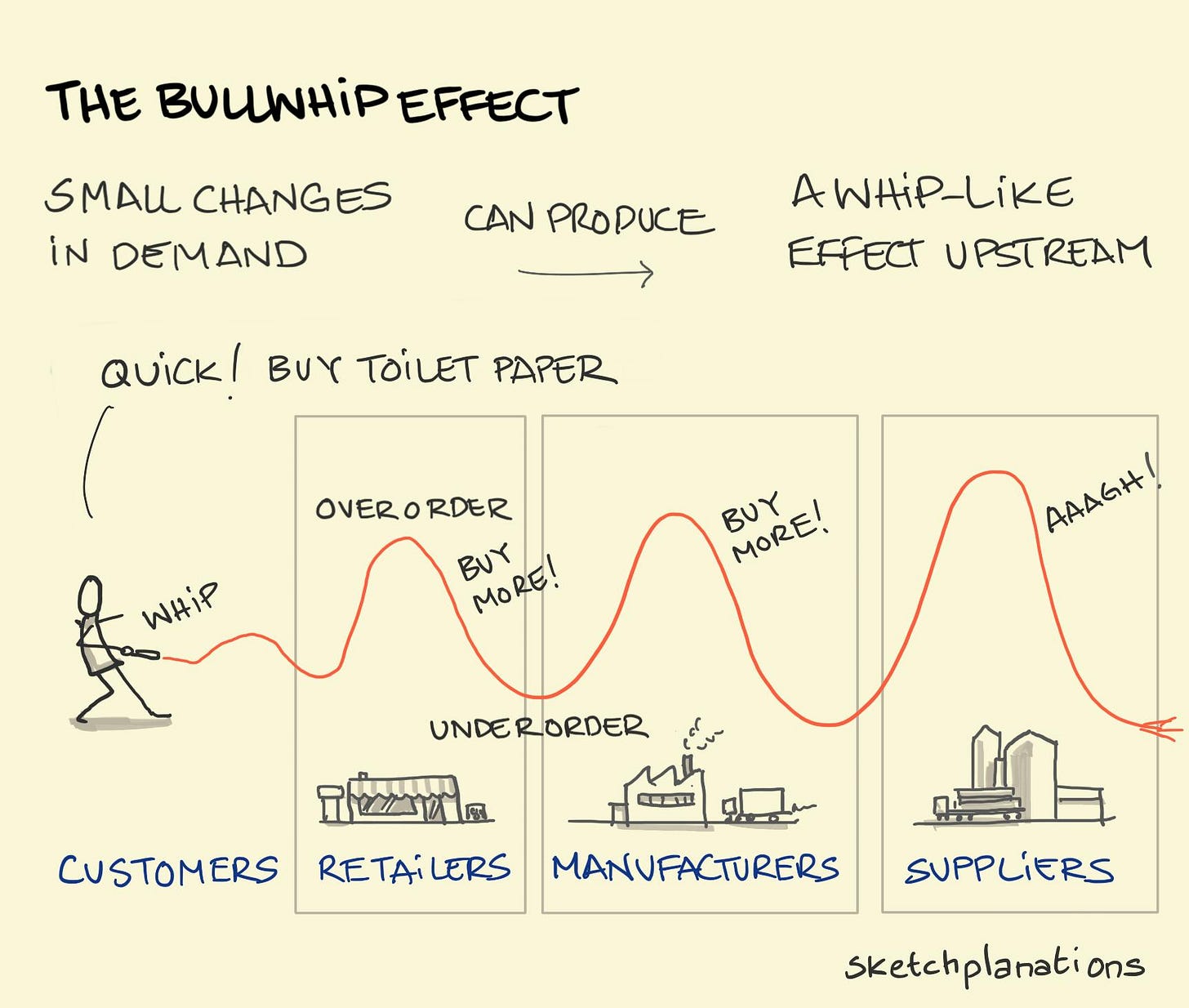

But even if such a pause were to play out, we will see it coming. It will not arrive as a shock in a single Nvidia print. Nvidia will be the final step in the chain; a trailing indicator.

Nvidia’s print won’t be the leading indicator of a bubble deflating.

Play it out. If investors doubted OpenAI’s ability to fund more compute, its infrastructure partners such as Microsoft would slow their forward CapEx plans. That would show up on earnings calls. ODMs would see hyperscaler rack-build orders flatten, and their earnings would reflect it. Nvidia would feel the impact only after that sequence because its production depends on long lead times and multiquarter commitments. The current quarter would still look solid, but the forward guide would come down.

To reiterate, any slowdown would unfold over several quarters rather than show up suddenly in one Nvidia earnings report. The headlines and people questioning Nvidia’s earnings before it reported don’t understand how the ecosystem works.

But couldn’t the AI infra build out come to a screeching halt rather than this telegraphed slowdown? And in that scenario we wouldn’t really know it until Nvidia reported?

Anything is possible, of course. But an abrupt halt in orders would require major buyers to refuse deliveries mid-quarter, and they are heavily disincentivized to do that even under extreme shocks (serious geopolitical tensions, pandemic, private credit market collapse, etc). Walking away from committed orders would seriously strain a buyer’s relationships with ODMs, OEMs, and Nvidia, and would push that buyer to the back of the allocation line.

AI demand is real and long-lasting (“a fundamental megatrend”), and access to the latest systems will remain a competitive edge. In uncertainty, hyperscalers are far more likely to trim future plans than cancel current commitments.

Thus, any adjustment would move through the chain with normal lead times, not as a sudden collapse.

Which, again, means we should NOT look to Nvidia as a leading indicator of an AI bubble deflating. Rather, we’d look to AI lab profitability, which is hard given that they are private companies. So the best place to look is cloud providers.

Nvidia, and even more so TSMC (and WFE companies), will be at the end of the bullwhip.

Of course, everyone is working to avoid that scenario. But regardless, look to the head of the whip for signals.
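To make the bullwhip concrete, here is a minimal Python sketch of how a funding shock at the head of the chain would take several quarters to surface in Nvidia’s results. The stage names follow the chain described above, but the lags (in quarters) are purely hypothetical placeholders I picked for illustration. They are not sourced from any company’s disclosures.

```python
# Each entry: (stage, lag in quarters after the previous stage shows the slowdown).
# The lag values are illustrative assumptions, not measured lead times.
CHAIN = [
    ("AI labs (funding tightens)", 0),
    ("Cloud providers (forward CapEx guide)", 1),
    ("ODMs / system builders (rack orders)", 1),
    ("Nvidia (forward guide)", 1),
    ("TSMC and WFE (wafer and tool orders)", 1),
]


def first_visible_quarter(chain, shock_quarter=0):
    """Map each stage to the quarter in which the slowdown first shows up."""
    visible = {}
    quarter = shock_quarter
    for stage, lag in chain:
        quarter += lag  # lags accumulate as the signal moves down the chain
        visible[stage] = quarter
    return visible


timeline = first_visible_quarter(CHAIN)
for stage, quarter in timeline.items():
    print(f"Q+{quarter}: {stage}")
```

Whatever the real lags are, the ordering is the point: under these assumptions the signal reaches cloud provider guidance quarters before it reaches Nvidia, and reaches TSMC and the WFE companies last.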

Earnings Takes

Ok, let’s get into takes from the earnings call.

Largest Networking Business In The World

Nvidia’s CFO Colette Kress gave nice color on the networking business:

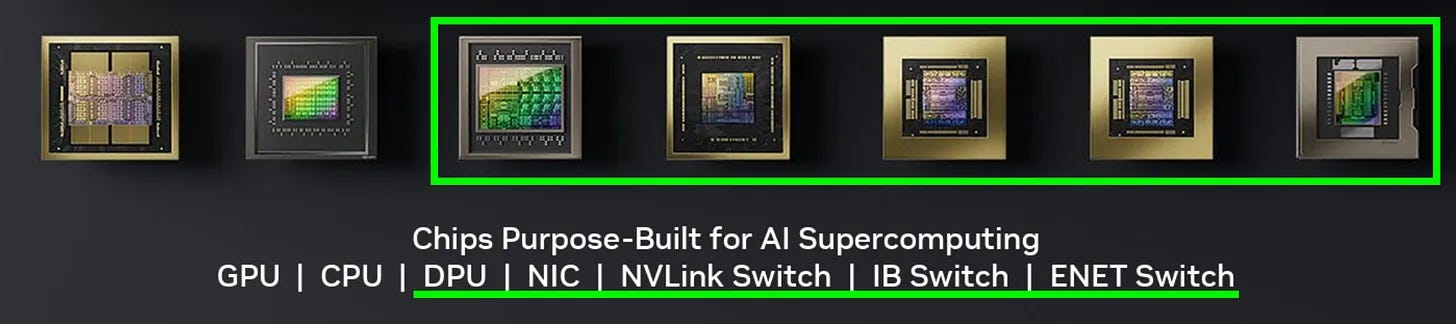

CK: Our networking business—purpose built for AI and now the largest in the world— generated revenue of $8.2 billion, up 162% year-over-year with NVLink, InfiniBand and Spectrum-X Ethernet, all contributing to growth.

Whew. $8.2B quarterly revenue from networking alone.

Largest in the world? Is that largest AI networking business in the world, or just largest networking period?

For reference, quarterly revenues for a few networking competitors:

Cisco: $7.8B networking revenue

Broadcom: $9.2B semiconductor solutions revenue, but this includes custom AI ASICs in addition to networking switches

Arista: $2.3B total revenue, with $1.9B product

HPE (+Juniper): $1.7B quarterly networking revenue

Seems Nvidia is right, they are the largest networking business in the world, period. A $4.4T market cap seems pretty justified as the largest compute AND networking business in the world.
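Here is that comparison as a short Python sketch, using only the quarterly figures listed above. The `comparable` flag is my own labeling: Broadcom’s number is excluded from the ranking because it bundles custom AI ASICs with networking, and Arista’s total revenue is used as a generous upper bound.

```python
# Quarterly revenue in USD billions, as listed above.
# "comparable" is an assumption of this sketch: False where the figure
# includes a lot of non-networking revenue.
vendors = [
    {"name": "Nvidia (networking)", "revenue": 8.2, "comparable": True},
    {"name": "Cisco (networking)", "revenue": 7.8, "comparable": True},
    {"name": "Broadcom (semiconductor solutions)", "revenue": 9.2, "comparable": False},
    {"name": "Arista (total revenue)", "revenue": 2.3, "comparable": True},
    {"name": "HPE + Juniper (networking)", "revenue": 1.7, "comparable": True},
]

# Rank only the like-for-like figures, largest first.
ranked = sorted(
    (v for v in vendors if v["comparable"]),
    key=lambda v: v["revenue"],
    reverse=True,
)
leader, runner_up = ranked[0], ranked[1]
lead_bn = leader["revenue"] - runner_up["revenue"]
lead_pct = lead_bn / runner_up["revenue"]

for v in ranked:
    print(f"{v['name']}: ${v['revenue']:.1f}B")
print(f"{leader['name']} leads {runner_up['name']} by ${lead_bn:.1f}B ({lead_pct:.1%})")
```

The lead over Cisco is narrow on these figures, and the conclusion depends on treating Broadcom’s segment as not like-for-like.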

Random thought: It would be interesting to crunch the numbers and see how much daily data movement occurs due to AI inference and training, and compare that to all other daily traffic, e.g. for cloud, mobile, video streaming, etc.

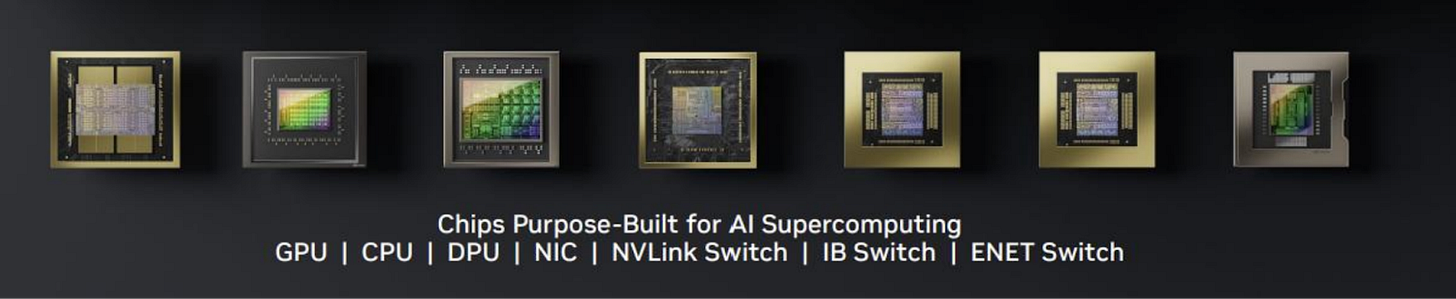

Recall that Spectrum-X was designed with AI in mind just like InfiniBand. Quick refresher here:

If you thought scale-out Ethernet would erase Nvidia’s networking lock-in, that doesn’t seem to be playing out, as Nvidia is seemingly replacing, not losing, InfiniBand sockets:

CK: We are winning in data center networking, as the majority of AI deployments now include our switches with Ethernet GPU attach rates roughly on par with InfiniBand.

This implies customers find the Spectrum-X performance vs competitors strong enough to justify staying within Nvidia’s ecosystem and any TCO trade-offs.

By the way, if Spectrum-X accounts for a decent portion of a $32B ARR business, that’s pretty good considering the product line was only launched 2.5 years ago in May 2023! 🤯
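The run-rate math is simple enough to check in a few lines of Python. This sketch annualizes the $8.2 billion quarter, backs out the implied year-ago networking quarter from the 162% growth figure, and counts the months since the May 2023 launch. The print date of November 2025 is my assumption, and all outputs are approximate because the inputs are rounded.

```python
# Inputs from the call, in USD billions (rounded).
networking_q_revenue = 8.2
networking_yoy_growth = 1.62   # "up 162% year-over-year"

# Annualized run rate: one quarter times four.
annualized_run_rate = networking_q_revenue * 4

# Implied year-ago quarter, approximate given rounded inputs.
implied_year_ago_q = networking_q_revenue / (1 + networking_yoy_growth)

# Time since Spectrum-X launched (May 2023), assuming a November 2025 print.
launch_year, launch_month = 2023, 5
print_year, print_month = 2025, 11
months_since_launch = (print_year - launch_year) * 12 + (print_month - launch_month)

print(f"Annualized run rate: ~${annualized_run_rate:.1f}B")
print(f"Implied year-ago networking quarter: ~${implied_year_ago_q:.1f}B")
print(f"Spectrum-X age: {months_since_launch} months ({months_since_launch / 12:.1f} years)")
```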

Again, never forget that of Nvidia’s 7 chips, 5 are networking!

In an earned humblebrag, Kress reminded the audience that despite ecosystem progress on UALink and talk of ESUN, Nvidia’s NVLink is battle-hardened:

CK: Currently on its fifth generation, NVLink is the only proven scale up technology available on the market today.

But the wording isn’t quite precise.

To be fair, AMD uses Infinity Fabric to scale up 8 GPUs in MI355X, so Nvidia is not the only vendor with a scale-up fabric in the market.

The precise statement would be that Nvidia is the only vendor with a proven rack-scale scale-up technology, since NVLink + NVSwitch supports 72 GPUs acting as a single memory-coherent domain (e.g. GB300 NVL72). AMD’s Infinity Fabric currently connects only 8 MI355X GPUs on a board or tray, and does not yet span full racks with switch-based, all-to-all GPU-to-GPU connectivity. That’s coming with the MI450 series.

The call emphasized that Nvidia’s networking team continues to push the innovation frontier too, from scale-across Spectrum-XGS, which moves data between clusters that are miles apart, down to NVLink Fusion, which coherently connects heterogeneous chips that are millimeters apart.

CK: We recently introduced Spectrum-XGS, a scale across technology that enables gigascale AI factories. NVIDIA is the only company with AI scale up, scale out and scale across platforms, reinforcing our unique position in the market as the AI infrastructure provider. Customer interest in NVLink Fusion continues to grow. We announced a strategic collaboration with Fujitsu in October, where we will integrate Fujitsu’s CPUs and NVIDIA GPUs via NVLink Fusion, connecting our large ecosystems. We also announced a collaboration with Intel to develop multiple generations of custom data center and PC products, connecting NVIDIA and Intel’s ecosystems using NVLink. This week at Supercomputing ‘25, Arm announced that it will be integrating NVLink IP for customers to build CPU SoCs that connect with NVIDIA.

It’ll be fascinating to watch how Nvidia’s networking business growth plays out in 2026 as the Ethernet scale out ecosystem matures.

Meta

Jensen talked a lot about Meta, and it was intentional.

Meta is accelerating CapEx next year, which means buying more Nvidia GPUs. Investors are worried because Meta doesn’t have a cloud rental business that would show some quick ROIC, and the stock has since fallen 20 percent on that fear.