The Four Horsemen: Google, Microsoft, Amazon, Meta Earnings

Wall Street favors quick cloud returns. But Meta’s betting on turning tokens into high-margin dollars. It’s a playbook it’s already run successfully and investors seem to be overlooking it.

The four horsemen just delivered another quarter of strong growth and record AI infrastructure spending. Not the Book of Revelation’s Four Horsemen, but these guys:

Notice one isn’t like the others! That matches investors’ reactions to earnings…

Let’s first unpack CapEx spending across the four. Then we’ll dive into the big question: when and where will all this investment earn a return?

And finally, given earnings, how should we feel about The People’s Champion?

No, not Muhammad Ali, but this guy:

CapEx

All four hyperscalers are accelerating their AI infrastructure buildouts, with 2026 spending plans set to climb even higher.

Alphabet

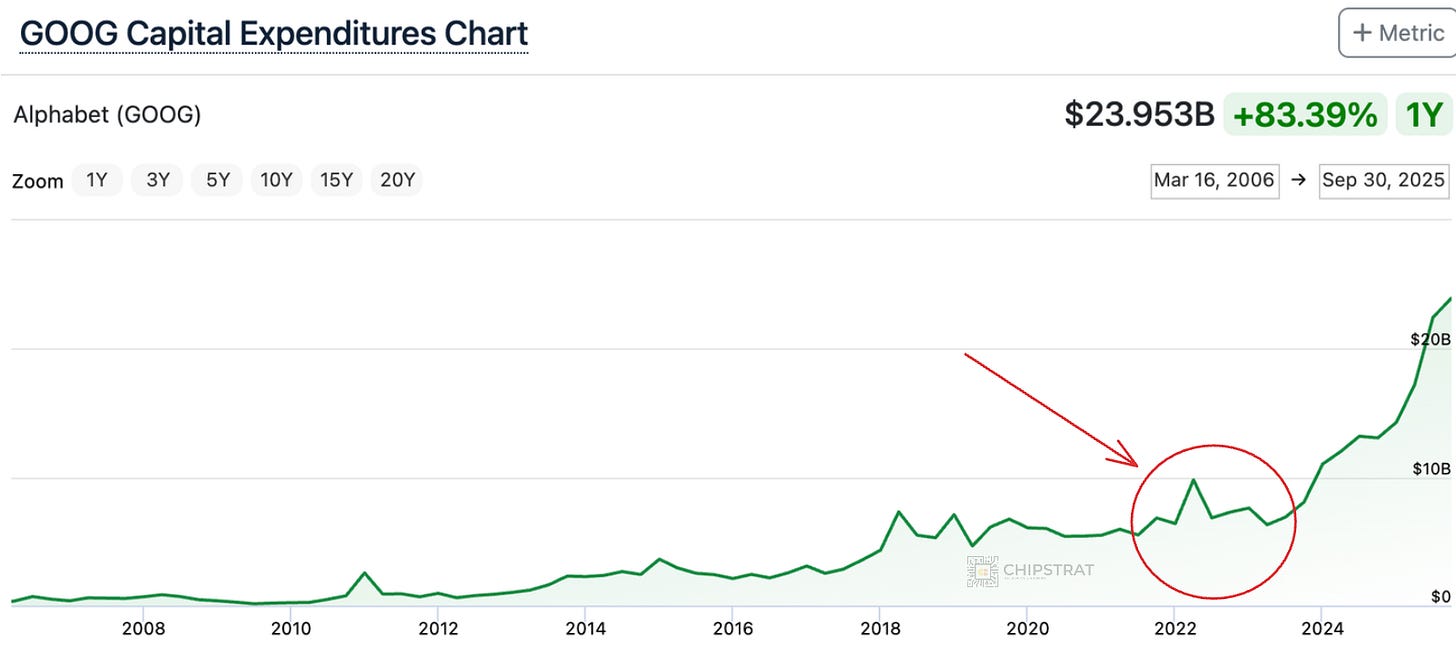

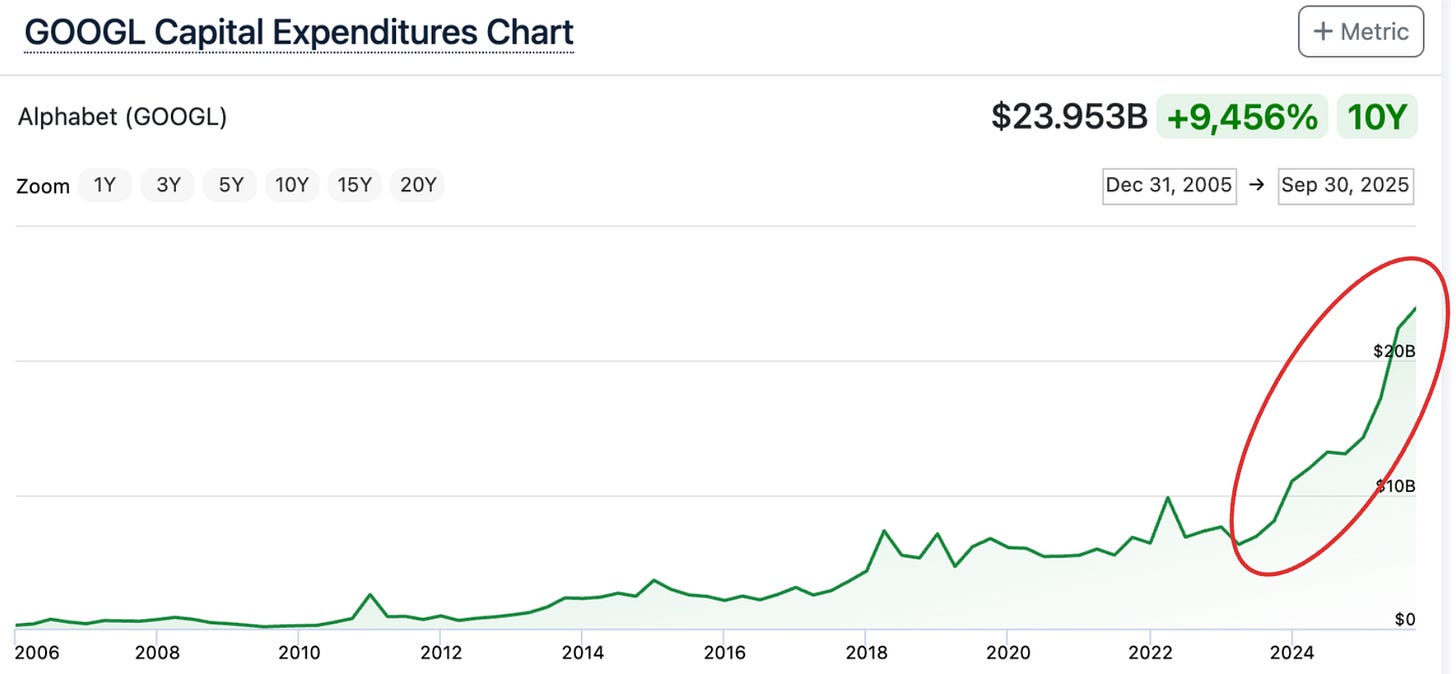

Google is investing aggressively to meet internal and external demand for AI compute, and raised FY2025 CapEx accordingly:

CFO Anat Ashkenazi: We’re continuing to invest aggressively due to the demand we’re experiencing from Cloud customers as well as the growth opportunities we see across the company. We now expect CapEx to be in the range of $91 billion to $93 billion in 2025, up from our previous estimate of $85 billion

A sign of the times: CapEx was bumped up by $6-8 billion!

While that might sound like chump change these days, it wasn’t that long ago that the total quarterly CapEx was $6-8B!

In fact, from IPO up until ChatGPT launch, Google’s quarterly CapEx only once exceeded $8B!

No wonder the entire data center supply chain is up in 2025, from memory and storage to cables and power systems. After all, Google said 60% of CapEx goes to compute (TPUs, GPUs, CPUs) while 40% covers everything else needed to house, power, and connect them.

AA: The vast majority of our CapEx was invested in technical infrastructure with approximately 60% of that investment in servers and 40% in data centers and networking equipment.
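As a quick back-of-the-envelope check on the numbers above, here is a minimal Python sketch. It assumes, as a simplification, that the 60/40 split applies to the whole full-year guidance range; the quote ties the split to technical infrastructure, which is "the vast majority" of CapEx rather than all of it. The function names are hypothetical helpers, not anything from Alphabet.

```python
# Back-of-the-envelope check on Alphabet's raised CapEx guidance.
# Dollar figures are in billions of USD and come from the quotes above.
PRIOR_ESTIMATE = 85.0        # previous FY2025 estimate
NEW_RANGE = (91.0, 93.0)     # updated FY2025 range
SERVER_SHARE = 0.60          # share going to servers, per the CFO


def guidance_bump(prior, new_range):
    """Return the (low, high) increase of the new range over the prior estimate."""
    low, high = new_range
    return low - prior, high - prior


def split_capex(total, server_share):
    """Split a CapEx total into (servers, data centers + networking).

    Assumption: the split is applied to the whole total, which slightly
    overstates both buckets because not all CapEx is technical infrastructure.
    """
    servers = total * server_share
    return servers, total - servers


low, high = guidance_bump(PRIOR_ESTIMATE, NEW_RANGE)
print(f"Guidance raised by ${low:.0f}B to ${high:.0f}B")

for total in NEW_RANGE:
    servers, rest = split_capex(total, SERVER_SHARE)
    print(f"${total:.0f}B total: ~${servers:.1f}B servers, ~${rest:.1f}B everything else")
```

Under that assumption, even the "everything else" bucket (data centers and networking) lands in the mid-$30 billions for the year, which helps explain why the broader supply chain is feeling it.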

The scale and acceleration of AI infra investment shouldn’t surprise anyone. This has been the direction and explicit guidance for several years. The shift to reasoning models in late 2024 and the subsequent surge in inference demand have kicked spending into an even higher gear:

Google CapEx to the moon.

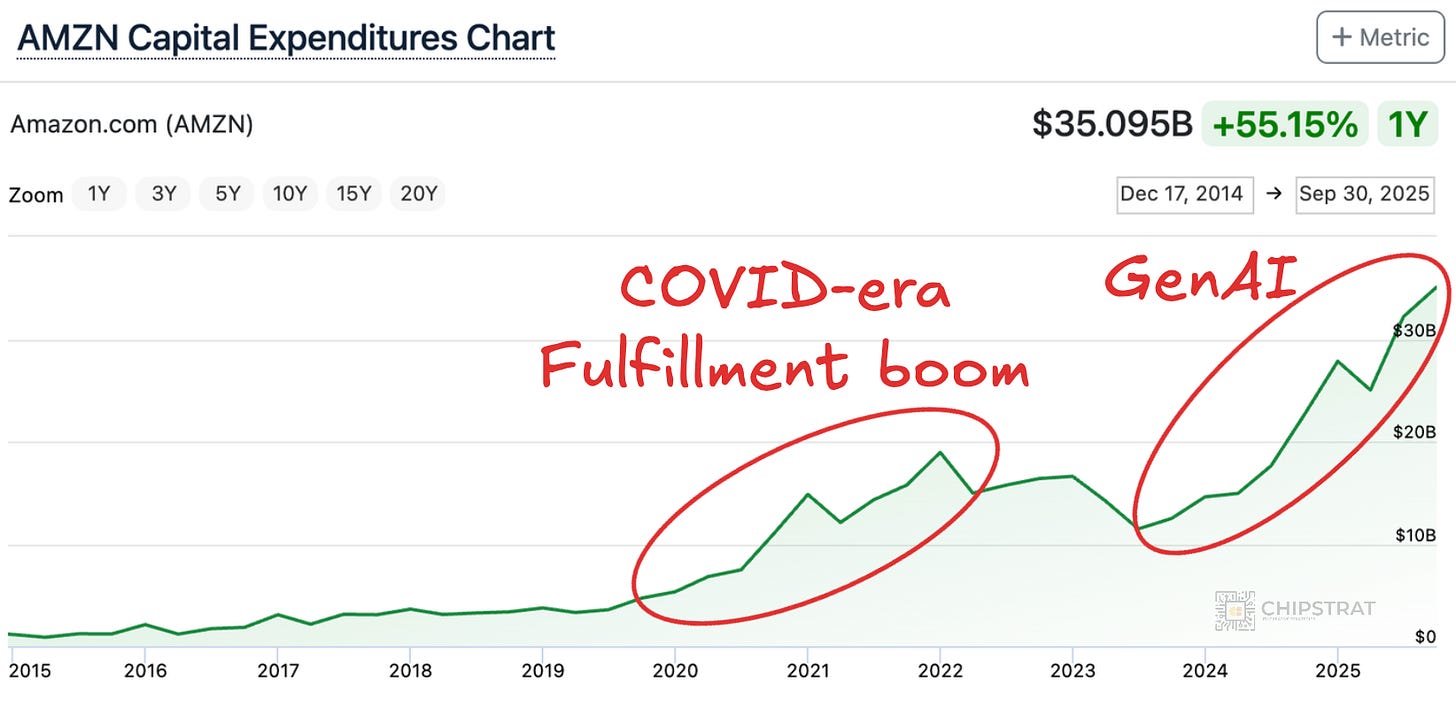

Amazon

Amazon is pouring record amounts into AI infrastructure. Like Google, that spend includes custom silicon (Trainium for AWS, TPU for Google) and the massive data centers to run them (e.g. Project Rainier).

CFO Brian Olsavsky: Now turning to our cash CapEx, which was $34.2 billion in Q3. We’ve now spent $89.9 billion so far this year. This primarily relates to AWS as we invest to support demand for our AI and core services and in custom silicon, like Trainium as well as tech infrastructure to support our North America and international segments.

Of course, Amazon’s total CapEx is bigger than everyone else’s because it also covers retail logistics (warehouses, delivery fleets, robotics, etc.).

But even that’s starting to look like datacenter spend: vast buildings packed with high-value machinery running accelerated compute.

Of course, some of this accelerated compute will be mobile, from traditional bots:

To humanoid ones:

Back to stationary AI compute investments:

BO: We’ll continue to make significant investments, especially in AI, as we believe it to be a massive opportunity with the potential for strong returns on invested capital over the long term…Looking ahead, we expect our full year cash CapEx to be approximately $125 billion in 2025, and we expect that amount will increase in 2026.

$125B in a single year, and increasing in 2026. So far we’re 2 for 2 on record CapEx in 2025 with plans for new highs in 2026…
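The guidance also pins down roughly what Q4 has to look like. A minimal sketch of that arithmetic, assuming "approximately $125 billion" lands exactly on $125B (the helper name is hypothetical):

```python
# Implied Q4 2025 cash CapEx for Amazon, in billions of USD.
# Inputs are the figures quoted from the earnings call above.
YTD_THROUGH_Q3 = 89.9      # spent so far this year
Q3_SPEND = 34.2            # Q3 cash CapEx
FULL_YEAR_GUIDE = 125.0    # "approximately $125 billion" (treated as exact here)


def implied_final_quarter(full_year, year_to_date):
    """Spend left for the last quarter if the full-year guide is hit exactly."""
    return full_year - year_to_date


q4 = implied_final_quarter(FULL_YEAR_GUIDE, YTD_THROUGH_Q3)
first_half = YTD_THROUGH_Q3 - Q3_SPEND  # combined Q1 + Q2 spend

print(f"Q1+Q2: ${first_half:.1f}B, Q3: ${Q3_SPEND:.1f}B, implied Q4: ~${q4:.1f}B")
```

That works out to roughly $35B for Q4, a touch above Q3’s $34.2B, so the guide implies spending keeps climbing sequentially into year-end.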

Like Google, Amazon’s quarterly CapEx has skyrocketed since late 2023, but the jump looks less dramatic because Amazon was already spending heavily. Its CapEx broke $10 billion per quarter during the post-COVID logistics buildout, when it doubled fulfillment capacity worldwide:

CFO Brian Olsavsky is already very familiar with the depreciation game, investing in massive buildings that depreciate over longer periods and are full of equipment that depreciates over 5-7 years:

When the world’s biggest store starts selling intelligence too, you start to realize how prescient Brad Stone was with the title of the Bezos biography “The Everything Store”.

A quick aside: an interesting question is which inventory will be ultimately more profitable to move, physical goods or digital tokens?

On the one hand, AWS manufactures and sells the tokens it produces, unlike retail, where Amazon just moves others’ goods.

On the other hand, a token is a token; whether generated on Trainium, Instinct, or Blackwell, the output is fungible. Cost structure matters, sure, but the final product is a commodity.

So even if AWS mints tokens more efficiently, the real question in the fullness of time: what kind of margin can you earn on a commodity?

I’m very familiar with another Team Green vs Team Red when it comes to harvesting a commodity:

And in the production of that commodity (corn and soybeans), it’s worth noting that the equipment makers (tech companies) have much better margins than those producing the commodity (farmers).

Which makes the case for Amazon to continue investing in Trainium; if they’re ultimately producing and selling a commodity, then cost structure is everything. And if you’re going to be one of the world’s largest token farmers, then it makes a ton of sense to design and build your own tractors.

Taking this aside one level deeper: the United States’ largest sugarcane producer, US Sugar, built its own rail system to transport its commodity efficiently and bring production costs down:

Amazing drone video, great song choice too lol (Pour Some Sugar On Me).

If you use your imagination, this is sorta like a hyperscaler creating its own networking to move its commodity (tokens) around more efficiently.

Kind of reminds me of AWS’ Elastic Network Adapter custom front-end network.

Microsoft

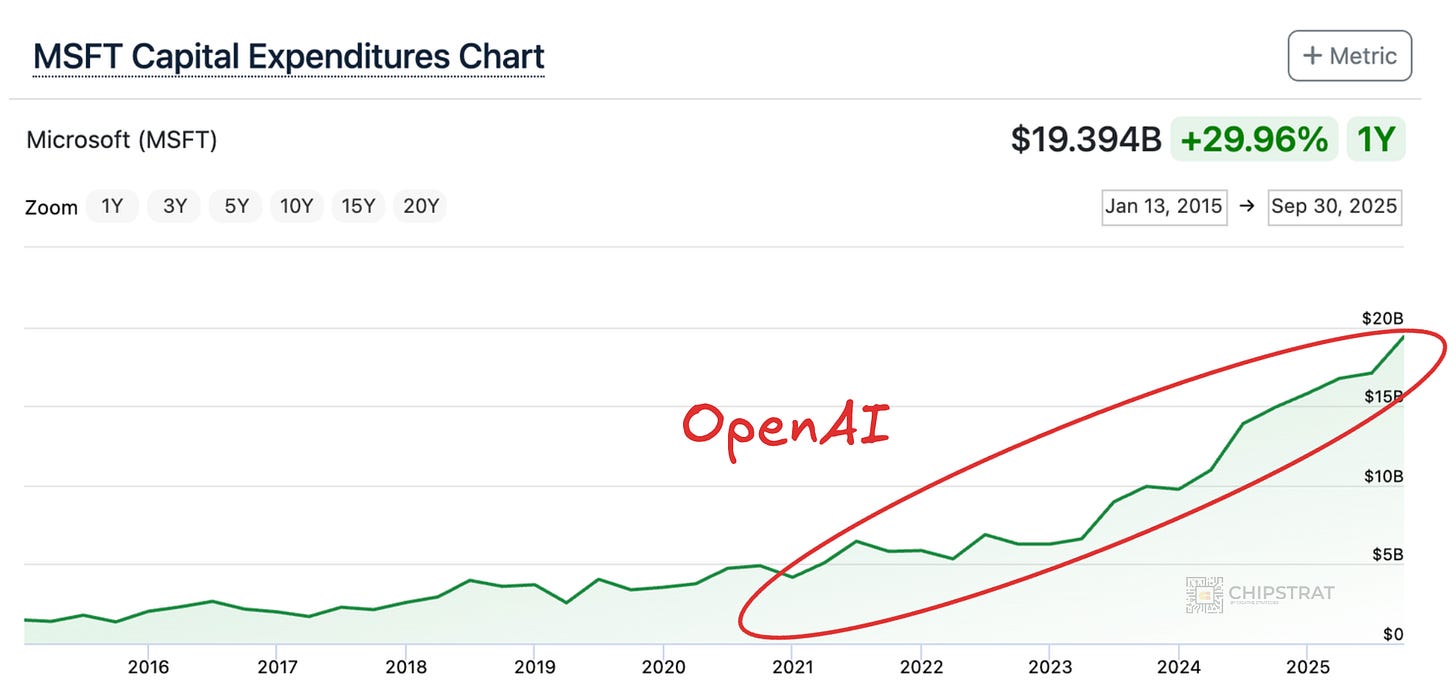

Hmm let’s see, any guesses from MSFT? Accelerating CapEx, expecting even more in 2026?

CFO Amy Hood: Next, capital expenditures. With accelerating demand and a growing RPO balance, we’re increasing our spend on GPUs and CPUs. Therefore, total spend will increase sequentially, and we now expect the FY ‘26 growth rate to be higher than FY ‘25.

You guessed it. The a-word.

To Microsoft’s credit, it’s the first-mover on AI CapEx, going way back before the Nov 2022 ChatGPT launch.

This traces its roots back to this 2019 OpenAI + Microsoft announcement:

The companies will focus on building a computational platform in Azure of unprecedented scale, which will train and run increasingly advanced AI models, include hardware technologies that build on Microsoft’s supercomputing technology…

This was obviously the right bet, and is simultaneously:

Why investors continue to give Microsoft the green light to increase CapEx

Why investors are concerned about the Microsoft/OpenAI partnership and the ill-defined concept of AGI

On the latter, the new OpenAI & Microsoft partnership gives some clarity, but still has the AGI wildcard, namely that the agreement runs until a set date or until AGI is declared.

To that end, CEO Satya Nadella downplayed that outcome on the earnings call:

Keith Weiss, Morgan Stanley: When we were thinking about this company a year ago or 5 years ago, 111% in Commercial bookings growth was not on anybody’s bingo card… yet the stock is underperforming in the broader market. And the question I have is kind of getting at the zeitgeist that I think is weighing on the stock. And is something about to change?

hint hint: will “AGI be declared” soon?

KW: AGI is kind of a nomenclature or a shorthand for that. And it’s something that still included in your guys’ OpenAI agreement. So Satya, when we think about AGI… is there anything that you see on the horizon, whether it’s AGI or something else, that could potentially change what appears to be a really strong positioning for Microsoft in the marketplace today? Where that strength will perhaps weaken on a go-forward basis?

Satya had to tiptoe around it for a few minutes before getting to the heart of it.

CEO Satya Nadella: I don’t think AGI as defined at least by us in our contract is ever going to be achieved anytime soon. But I do believe we can drive a lot of value for customers with advances in AI models by building these systems.

That seems reassuring to investors. Of course Sam doesn’t agree, but in the BG2 interview he did explicitly say that OpenAI would continue to work with Microsoft even after an AGI declaration:

Brad Gerstner: Satya, you said on yesterday’s earning call that nobody’s even close to getting to AGI and you don’t expect it to happen anytime soon… Sam, I’ve heard you perhaps sound a little bit more bullish on when we might get to AGI. So, I guess the question is to you both. Do you worry that over the next two or three years we’re going to end up having to call in the jury to effectively make a uh a call on whether or not we’ve hit AGI?

Props to Brad for bringing investors’ concerns into the conversation.

Sam Altman: I expect that the technology will take several surprising twists and turns and we will continue to be good partners to each other and figure out what makes sense… to say the obvious if we had super intelligence tomorrow, we would still want Microsoft’s help getting this product out into people’s hands

Positive sentiment for sure, but of course Sam didn’t say OpenAI would exclusively want Microsoft’s help.

Case in point, yesterday’s OpenAI announcement with AWS, Microsoft’s cloud rival:

Under this new $38 billion agreement, which will have continued growth over the next seven years, OpenAI is accessing AWS compute comprising hundreds of thousands of state-of-the-art NVIDIA GPUs, with the ability to expand to tens of millions of CPUs to rapidly scale agentic workloads.

Oh, we just broke up and you already have a new girlfriend? That was fast… like you were already talking before we even broke up…

Note that it’s Nvidia GPUs, not Trainium.

But the real interesting nugget here is the mention of all the CPU compute that will be needed to scale agentic workloads. Tens of millions of CPUs! They didn’t have to include that bit if they didn’t think it was probable…

That implies CPU server TAM will increase.

This is precisely what I said nearly a year ago:

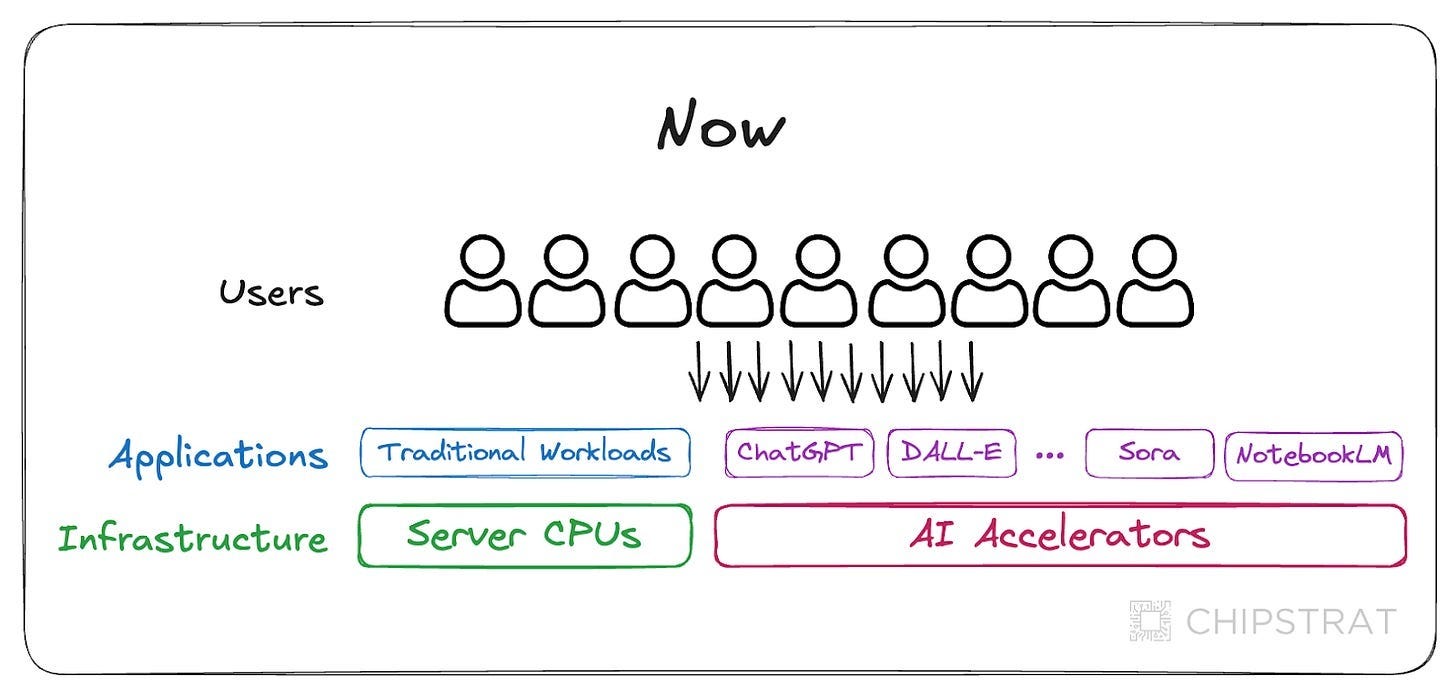

Over the past two years, we’ve witnessed the rise of generative AI-powered workloads running on AI accelerators like Nvidia GPUs and Google TPUs. These applications are being used in addition to the aforementioned traditional workloads.

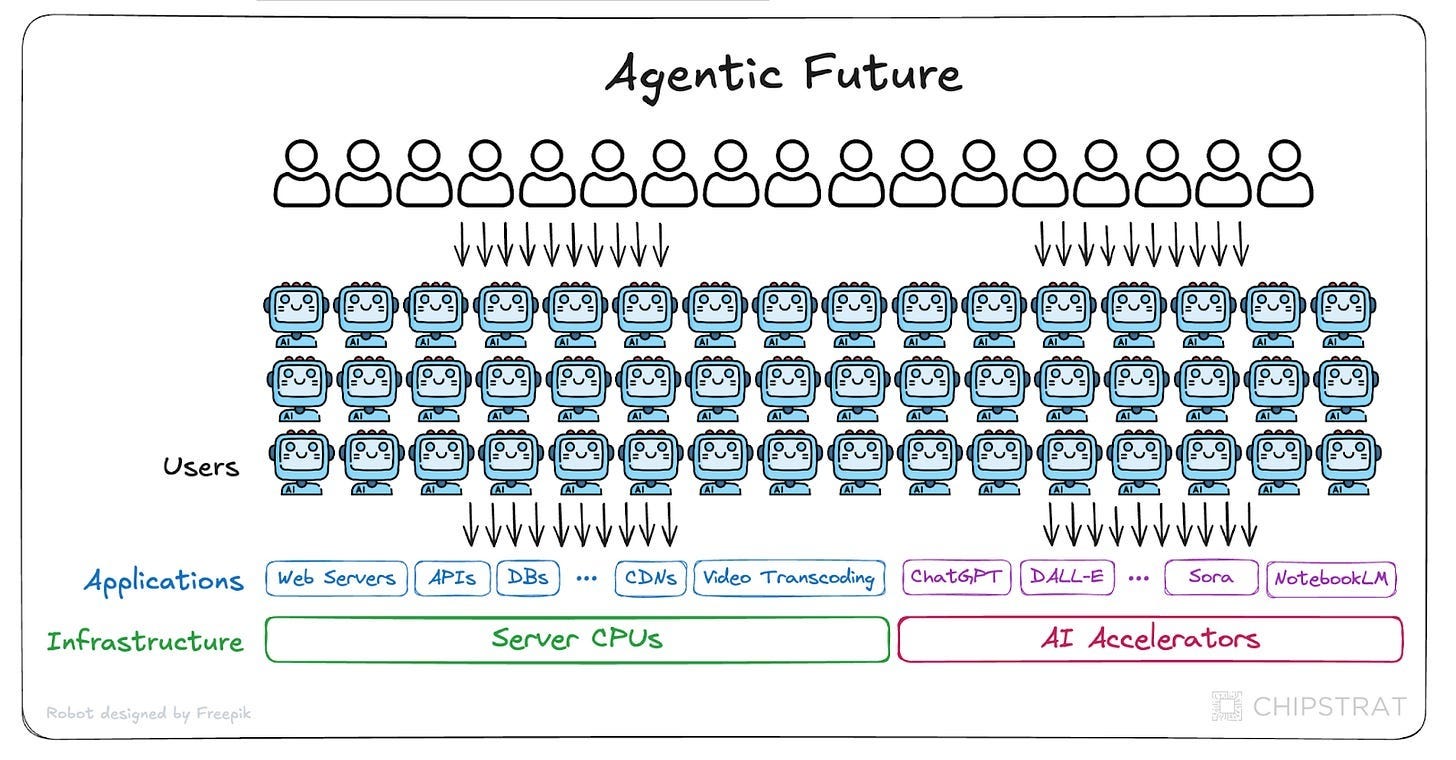

However, in the coming agentic era, a single person may have many agents working for them. So we’ll see an influx of compute consumption from agents:

Sure, GPUs power the agents, but these agents will do a lot of traditional jobs on CPUs. Think of this as having access to many interns at your bidding.

If I had interns, I would do a lot more work! I could and should do a lot of spreadsheet-based analysis to support my decision-making, but I don’t have the time. However, with agents, just like interns, I could give high-level directives and send them off.

What’s the implication for the semiconductor industry? Access to more workers means more traditional work gets done, which increases the load on conventional and accelerated infrastructure.

This is a bull case for CPU-server TAM expansion!

By the way… which CPUs are those agents going to run on? Maybe x86. Maybe AWS Graviton.

If I’m Intel Foundry, I’d be on the phone with Matt Garman asking how I can help produce an American-made-and-packaged Graviton in Arizona and New Mexico. Could get it to him sometime in 2027, just in time for the actual year of the agents.

With the rise of agentic AI tooling from OpenAI (AgentKit) and Microsoft (Agent Framework), don’t be surprised to learn that CPUs are increasingly in demand and in the mix in Microsoft’s future CapEx plans.

Just remember that there’s a diffusion process between when a technology is launched and when it’s adopted, so CPU TAM isn’t going to spike soon.

Meta

This horseman isn’t like the others. No cloud.

Just Coors, personality, and flair.

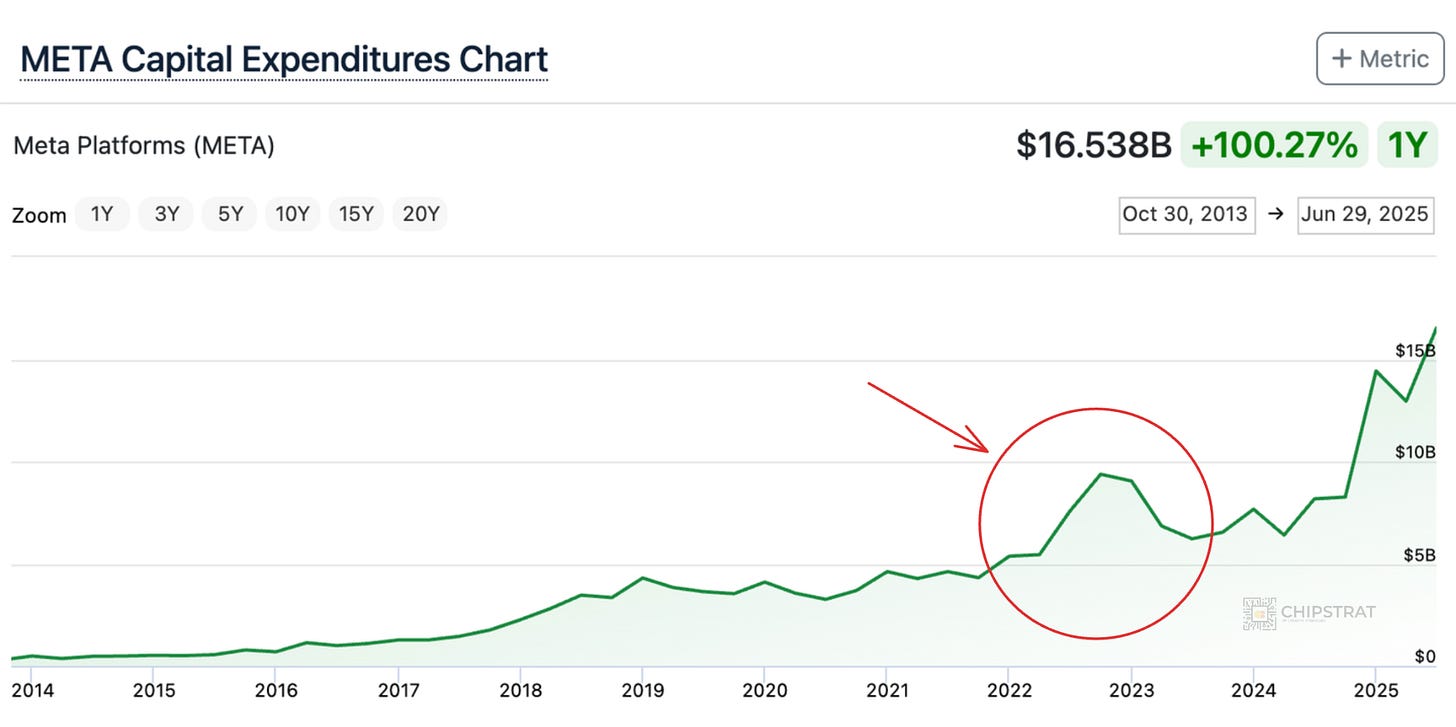

The strategy is different as we’ll see, but the tactics look the same; CapEx up and to the right.

CFO Susan Li: We currently expect 2025 capital expenditures, including principal payments on finance leases to be in the range of $70 billion to $72 billion, increased from our prior outlook of $66 billion to $72 billion… We are still working through our capacity plans for next year, but we expect to invest aggressively to meet these needs, both by building our own infrastructure and contracting with third-party cloud providers.

Zuck said the a-word too.

Mark Zuckerberg: But the right thing to do is to try to accelerate this to make sure that we have the compute that we need, both for the AI research and new things that we’re doing and to try to get to a different state on our compute stance on the core business.

Which leads to the ROI question.

If Meta isn’t turning around and renting the infrastructure out, it’s going to take a lot longer to generate a return, right?

This is the main reason Meta’s stock is down 16% in the past five days.

The other horsemen have a direct line of sight to ROIC on at least some of the infrastructure; mark it up, rent it out.

But investors aren’t so sure about Meta, which has to turn that infrastructure into intelligence tokens and use them in such a way that drives up the top line of its core business, ads.

Zuckerberg argues that he too can quickly turn some of the compute into ROI, not by renting it out but by allocating internally to existing ML models powering recommendations and ads. No GenAI needed.

CEO Mark Zuckerberg: I think that it’s the right strategy to aggressively frontload building capacity so that way we’re prepared for the most optimistic cases.

That way, if superintelligence arrives sooner, we will be ideally positioned for a generational paradigm shift in many large opportunities. If it takes longer, then we’ll use the extra compute to accelerate our core business which continues to be able to profitably use much more compute than we’ve been able to throw at it.

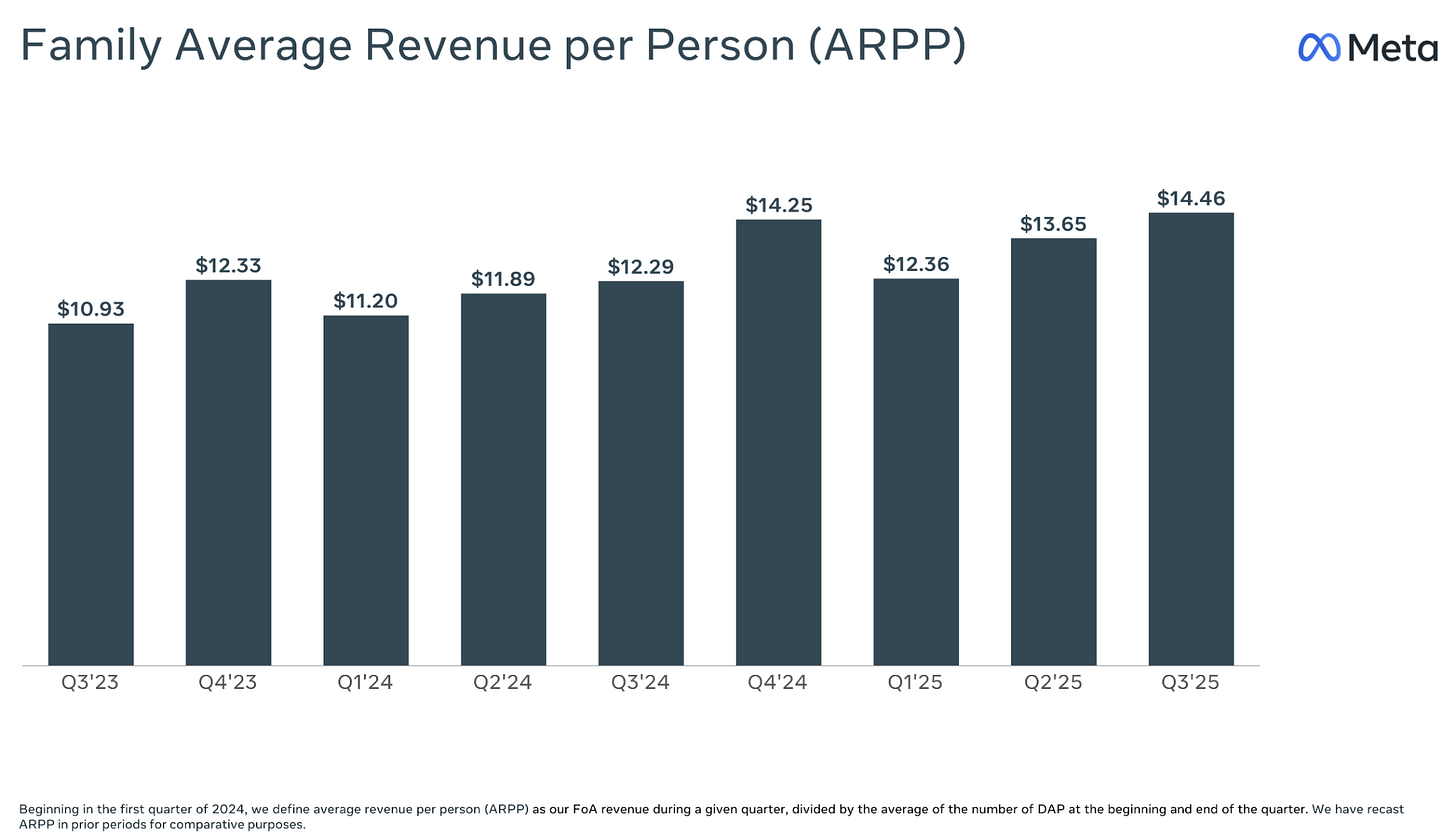

He’s absolutely right about that too. Meta advertising revenue is at all-time highs.

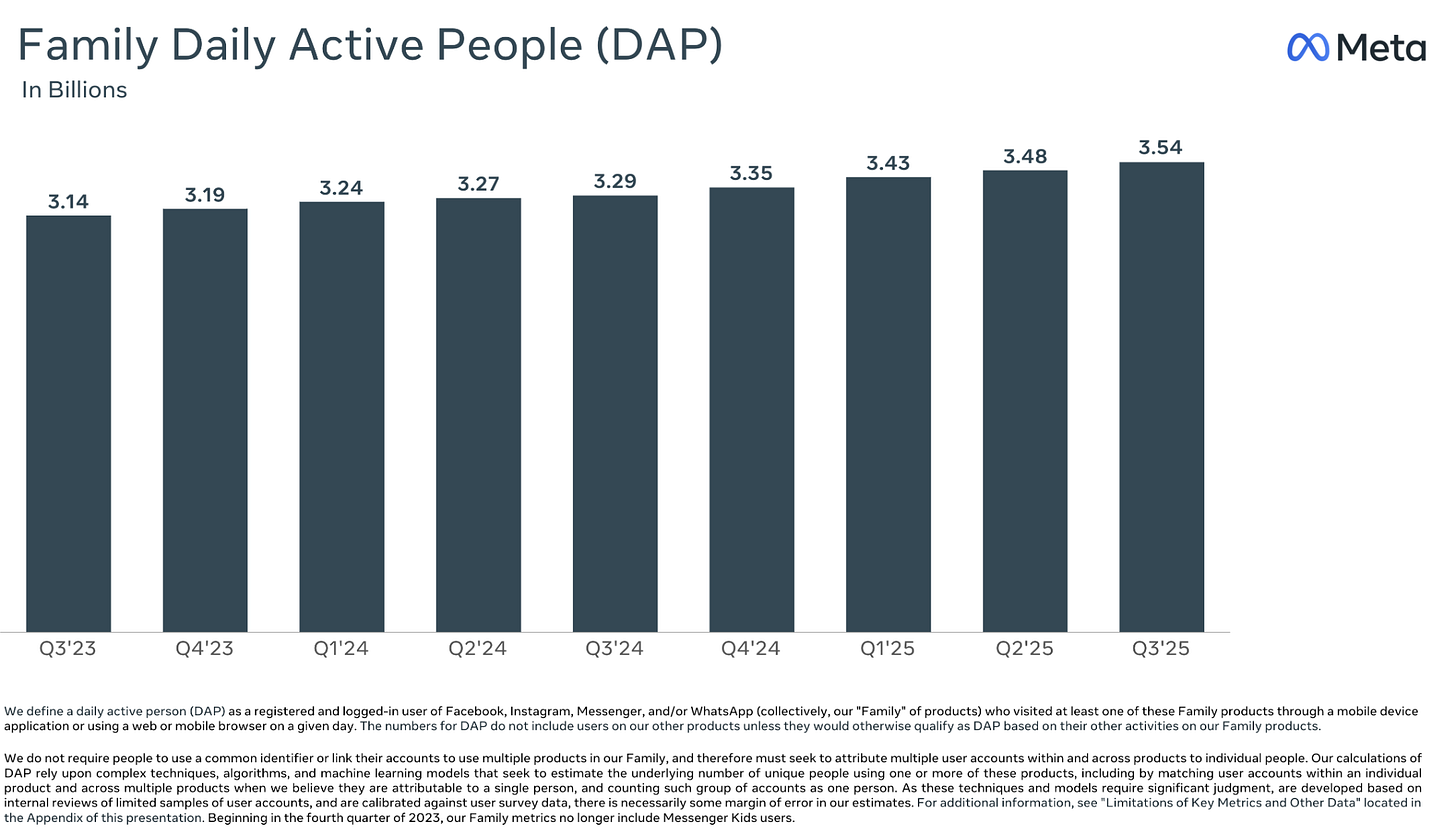

And wouldn’t others (cough cough OpenAI) LOVE to have 3.5B DAILY active users… and …. Wait for it… make almost $60 PER USER PER YEAR!

Dude.

This is why advertising is the right revenue model for OAI.

3.5B people on the planet are NOT going to pay $60/year on average for ChatGPT.

But you are dang right that businesses worldwide will bid to show their wares to each and every one of those users, and it can accumulate to $60 per person.
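To put a number on that, here is a minimal sketch of the per-user math. It treats "almost $60" as a flat $60 (so the totals are a rough ceiling) and applies it to all 3.5B daily actives; the helper names are hypothetical.

```python
# Rough ad-revenue math from the figures above.
DAILY_ACTIVE_USERS = 3.5e9      # 3.5B daily active users
REVENUE_PER_USER_YEAR = 60.0    # "almost $60" per user per year, rounded up


def annual_revenue(users, revenue_per_user_year):
    """Total yearly revenue if every user generates the same amount."""
    return users * revenue_per_user_year


def per_user_per_day(revenue_per_user_year, days=365):
    """How much ad revenue each user needs to generate per day."""
    return revenue_per_user_year / days


total = annual_revenue(DAILY_ACTIVE_USERS, REVENUE_PER_USER_YEAR)
daily = per_user_per_day(REVENUE_PER_USER_YEAR)

print(f"Implied annual revenue: ~${total / 1e9:.0f}B")
print(f"Per user per day: ~${daily:.2f}")
```

Framed per day, that is only about 16 cents of advertiser spend per user, which is a much easier ask than a $60 annual subscription from each of those users.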

Back to Zuck: revenues are up on the back of traditional ML models. I’m very bullish on GenAI improving advertising even more, as I explained here back in July:

My two cents: any AI compute thrown at traditional ML can generate very quick returns for Meta, and efforts to use GenAI to improve ads and recommendations further will also pay off in due time just like Zuck said. But how long is “due time”?

In the even longer run, GenAI will create more opportunities for Meta to show ads to users. Maybe that’s new products like Vibes. How long though?

Or even placement opportunities from Meta’s AR/VR efforts. Oh… no.. you went there.

Dude. I’m most bullish on Meta’s ability to monetize this AI infrastructure investment because they’ve already run this playbook! Investors are overlooking this!

Meta invested heavily in AI compute three years ago, which is precisely why today’s earnings are so good:

From Meta’s Q3 2022 earnings call way back in Oct 2022 before ChatGPT.

CFO David Wehner: Before turning to our CapEx outlook, I’d like to provide some context on our infrastructure investment approach. We are currently going through an investment cycle, which is being driven -- which is primarily driven by 2 large areas of investment. First, we are significantly expanding our AI capacity. These investments are driving substantially all of our capital expenditure growth in 2023. There is some increased capital intensity that comes with moving more of our infrastructure to AI. It requires more expensive servers and networking equipment, and we are building new data centers specifically equipped to support next-generation AI hardware. We expect these investments to provide us a technology advantage and unlock meaningful improvements across many of our key initiatives, including Feed, Reels and Ads.

We are carefully evaluating the return we achieved from these investments, which will inform the scale of our AI investment beyond 2023. Second, we are making ongoing investments in our data center footprint. In recent years, we have stepped up our investment in bringing more data center capacity online. And that work is ongoing in 2023. We believe the additional data center capacity will provide us greater flexibility with the types of servers we purchase and allow us to use them for longer, which we expect to generate greater cost efficiencies over time.

Compute and data centers. Sounds a lot like today’s earnings calls!

At the time, the investment in AI infra was meant to overcome ATT (Apple’s App Tracking Transparency; see Stratechery for more). It worked and is still paying dividends today, as revenue is not yet significantly impacted by Generative AI.

Meta has already shown it can build AI infrastructure and utilize it to drive increased top line revenue.

But I understand why investors are not seeing this. Reality Labs is obscuring their vision. Rightly so. That was a huge investment too, and the time-to-payoff is much much longer. As in, it currently sits at infinity years to pay off. Maybe 2031, 2032? Bullish on AR.

And, in all fairness, the whole “AGI” thing feels as fuzzy and futuristic as Reality Labs, and so the vibes are bad.

If Mark or Susan are reading this: you should keep leaning into the narrative that you’ve already proven you can turn tokens into top line revenue!!

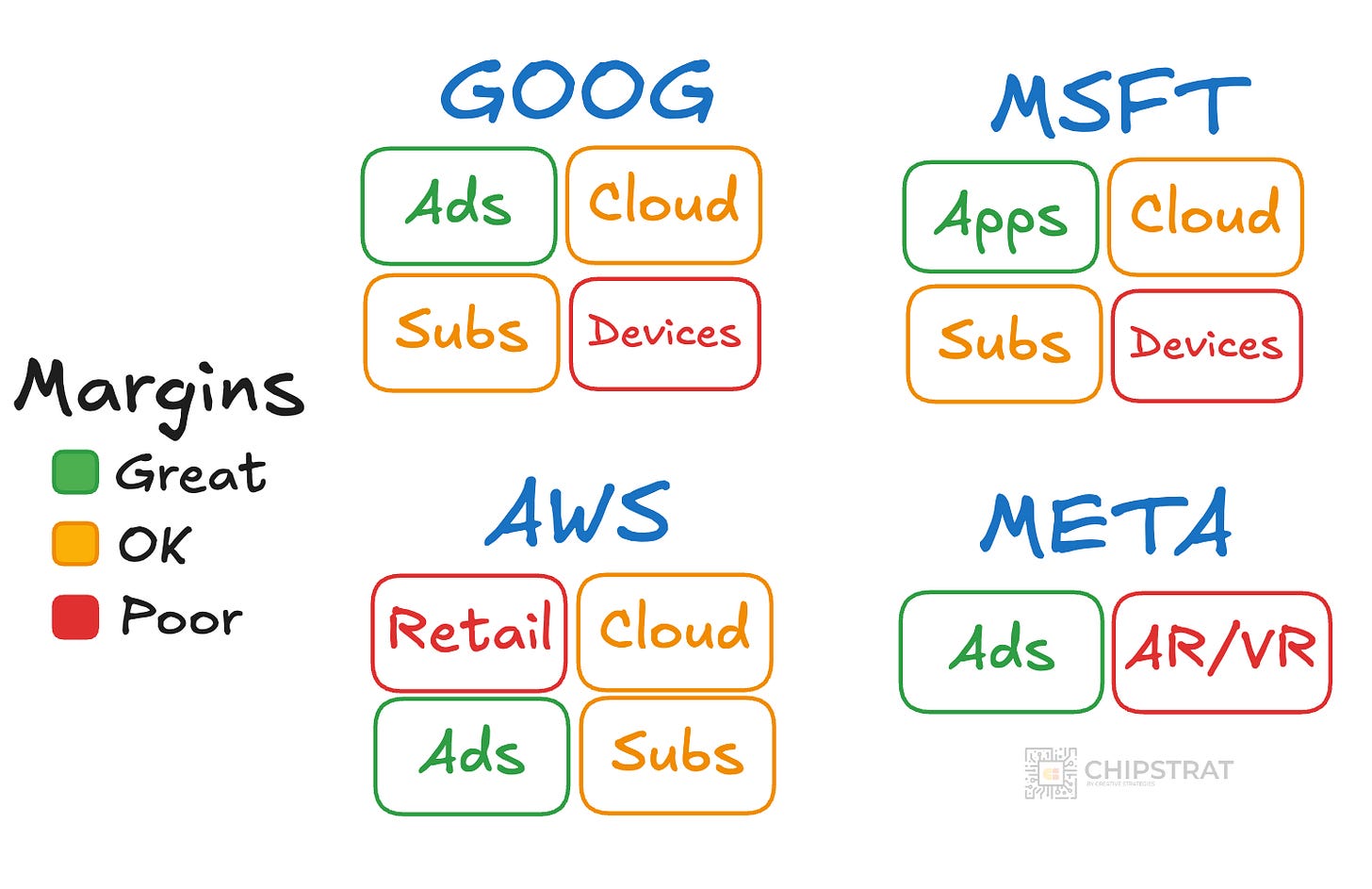

ROIC

Meta is down after earnings. The other horsemen are flat or up.

Investors feel more confident about quick returns (cloud).

But the margins on those quick returns are only OK.

Margins on advertising are great. Cloud is ok.

So investors are prioritizing quick, safe returns over a longer, riskier bet but with higher margins.

Let’s look at where the horsemen are investing their AI compute and what the payoff might be in both the short and long term.

Google

Google is in a good spot. It can turn some AI infrastructure into quick returns with the cloud ($), but also use GenAI to increase the effectiveness of its advertising in the long run ($$$). So make some pennies now, and dimes later.

BuT sEaRcH wIlL bE cAnNiBaLiZeD!!! Dimes aren’t guaranteed!

Between Google’s insane consumer reach (Search, Maps, Gmail, Chrome, Android, Docs, etc.) and its growing lineup of models and tools (NanoBanana, Gemini, Veo, Genie, etc.), I am not worried about it.

Oh yeah, plus Sundar said Search engagement is up.

Sundar Pichai: As people learn what they can do with our new AI experiences, they’re increasingly coming back to Search more. Search and its AI experiences are built to highlight the web, sending billions of clicks to sites every day. During the Q2 call, we shared that overall queries and commercial queries continue to grow year-over-year. This growth rate increased in Q3, largely driven by our AI investments in Search, most notably AI Overviews and AI Mode. Let me dive into the momentum we are seeing. As we have shared before, AI Overviews drive meaningful query growth. This effect was even stronger in Q3 as users continue to learn that Google can answer more of their questions, and it’s particularly encouraging to see the effect was more pronounced with younger people. We’re also seeing that AI Mode is resonating well with users. In the U.S., we have seen strong and consistent week-over-week growth in usage since launch and queries doubled over the quarter. Over the last quarter, we rolled out AI Mode globally across 40 languages in record time. It now has over 75 million daily active users, and we shipped over 100 improvements to the product in Q3, an incredibly fast pace. Most importantly, AI Mode is already driving incremental total query growth for Search.

And let’s revisit how advertising works:

Own attention

Sell ads and profit

Google is just fine.

Microsoft

Microsoft also allocates a lot of its AI infrastructure investments to cloud. Quick penny.

MSFT is also working hard to integrate AI into productivity apps / subscriptions. The payoff is much less clear than throwing compute at advertising.

Will GenAI increase Microsoft Office’s value or stickiness? Not so sure. GitHub, yes.

So with these bets, the expected value is lower IMO than Google’s bets.

AWS

Investors are excited that demand from customers for AI rentals is accelerating, including for Trainium.

And hey, cloud rental margins aren’t great compared to advertising, but they are AMAZING compared to Amazon’s retail business.

Shoot, Trainium rentals might even lift cloud margins if AWS keeps a little cut of the savings while passing on the rest to the customer.

But choosing Trainium requires porting software and being OK with less-than-leading FLOPS, memory bandwidth, and network bandwidth. Yet some mega customers seem willing to deal with that for better TCO. After all, these customers are renting compute by the hour, not paying by the token. Which is simply to say that some folks think about budget allocation in terms of “I can spend $100M this year, how much compute can I rent with that?”
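That fixed-budget framing can be sketched in a few lines of Python. Every price and throughput figure below is a made-up placeholder for illustration, not actual AWS, Trainium, or Nvidia pricing or performance; the `Accelerator` class and helper names are hypothetical too.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    name: str
    hourly_rate: float          # USD per accelerator-hour (HYPOTHETICAL)
    relative_throughput: float  # work per hour vs. the leading chip (HYPOTHETICAL)


def rentable_hours(budget, acc):
    """Accelerator-hours a fixed budget buys at the given hourly rate."""
    return budget / acc.hourly_rate


def work_units(budget, acc):
    """Total work done, measured in 'leading-chip hours' of output."""
    return rentable_hours(budget, acc) * acc.relative_throughput


BUDGET = 100e6  # the "$100M this year" from the text

# Placeholder numbers chosen only to show the shape of the trade-off.
leading = Accelerator("leading GPU", hourly_rate=10.0, relative_throughput=1.0)
custom = Accelerator("custom silicon", hourly_rate=6.0, relative_throughput=0.8)

for acc in (leading, custom):
    hours = rentable_hours(BUDGET, acc)
    work = work_units(BUDGET, acc)
    print(f"{acc.name}: {hours / 1e6:.1f}M hours, {work / 1e6:.1f}M work units")
```

With these placeholder inputs, the slower but cheaper chip still delivers more total work per dollar. Whether that holds in practice depends entirely on real prices, real throughput, and the porting cost, none of which this sketch models.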

If AWS is listening, they ought to allocate some of that AI capacity to further increase advertising revenue, given its incredible margins. I’m assuming they already are, and if so they should tell that story to investors.

Meta

Bearish on Meta, right? Not so fast.

The other three horsemen are investing compute into quick returns with smaller gross profit dollars (cloud). It’s a safe investment in OK businesses.

But Meta is different. It doesn’t have OK businesses. It just has a GREAT business (ads), and a NEGATIVE business (Reality Labs).

And Zuck is betting ALL OF THE COMPUTE on the GREAT business!

You follow?

Everyone else is making safe bets with OK returns, whereas Zuck is all in on high-margin ad revenue.

And we know that the old ML models can already produce quick returns with extra compute. So the time horizon there isn’t on the order of Reality Labs, and arguably the GenAI enabled ad opportunities are not too far away either.

Zuckerberg is making a great bet. Don’t waste the compute picking up pennies. Go after dimes!

As Ric Flair says:

Whether you like it or don’t like it, learn to love it, because it’s the best thing going today! Woooooooo!

Yes, Google is a safe bet. But Meta’s strategy makes sense too.

Learn to love it!

Nvidia & Broadcom

What do earnings from the Four Horsemen mean for chip makers?

CapEx growth is good for Nvidia. Don’t forget, Nvidia growth is ultimately limited by TSMC supply…

But surprising external demand for the TPU… what does this mean for Broadcom?