WWDC25: Apple’s Playing a Different Game

The strategy makes sense. The narrative is missing. Let’s examine Apple’s AI approach from first principles and assess whether WWDC delivered.

Before WWDC, Bloomberg said Apple’s AI efforts need to “catch up to leaders like OpenAI and Google.”

This assumes Apple is running the same AI race as OpenAI and Google. Is it?

And after WWDC, The Wall Street Journal declared that “Apple fails to clear a low bar on AI”.

This implies a clear standard that Apple failed to meet. But is Apple’s AI goal clear to observers?

Forget the Apple Intelligence marketing debacle. Let’s reset and start from first principles to decide for ourselves.

What AI race is Apple actually running?

What did WWDC reveal about Apple’s AI progress?

Let’s find out.

First Principles

We’ll start with observations about Apple as context for their AI strategy. Yes, these observations seem self-evident, but then again, people are comparing Apple’s AI efforts to OpenAI, so…

Business Model

Show me the incentives, and I will show you the outcome. —Charlie Munger

If you want to understand why a company does what it does, look at what it’s rewarded for.

In Apple’s case, it starts with customers willingly paying a premium for consumer hardware, especially the iPhone. Almost everything else, from Services to accessories like AirPods and Apple Watch, gets pulled along by the strength of the iPhone.

Yes, Services revenue is big, but it grows because customers love the hardware. The better the experience across Apple’s family of devices, the more people stick around, and the more they spend with Apple over time.

Customers

Apple has over 2 billion active devices, likely used by close to a billion people. That means many hundreds of millions of people interact with Apple products every day. The majority of customers have iPhones, which are checked dozens or even hundreds of times daily, so the scale of daily touchpoints is nearly incomprehensible. Kajillions of Apple-powered user experiences are woven into daily routines worldwide.

And don’t forget — these are premium, loyal users.

Core Competencies

When a device becomes an extension of you, it must be frictionless. Apple earned that role for close to a billion people by obsessing over the whole product, from silicon to software to design, to deliver frictionless experiences.

And increasingly, those experiences run on traditional machine learning and AI under the hood. Why type in a password dozens of times a day when a glance will do? Face ID to the rescue! Face ID runs computer vision ML (convolutional neural networks), with the singular goal of reducing friction a kajillion times per day.


As Apple’s ML Research team explained in 2017:

Apple started using deep learning for face detection in iOS 10. With the release of the Vision framework, developers can now use this technology and many other computer vision algorithms in their apps. We faced significant challenges in developing the framework so that we could preserve user privacy and run efficiently on-device.

Apple has the wherewithal to build world-class AI that runs locally on its world-class consumer hardware devices. Hint, hint.

Developer Platform

Apple’s first-party apps like Phone, Messages, Finder, and Settings ensure that users have a polished experience for core tasks. They also set the tone, demonstrating Apple’s design ethos and teaching users (and third-party developers) what good looks and feels like.

But third-party apps are even more important. The iPhone’s early breakout success was as much about the App Store as anything else!

Apple’s App Store ecosystem is a massive two-sided marketplace. Developers come for the scale; a billion users means even niche interests have huge audiences. Birdwatching? Stargazing? Quilting? Come build it, your users are here.

And users come for this long tail of apps.

Apple’s dev platform is one of its most important and still underappreciated advantages. Any AI aspirations Apple has must treat third-party developers as first-class citizens.

The Right AI Strategy for Apple

So, given these observations, what should Apple’s AI strategy be?

Everyone else is fixated on asking whether Apple should be running the foundation model race. But that’s the wrong question. The real question is:

What makes a great personal computing device in a GenAI world?

What new experiences are now possible on a phone, laptop, watch, etc?

What needs to be invented, adapted, or rethought to make these experiences real?

And how can developers be empowered to build them?

The right AI strategy for Apple is the same one it’s always had: leverage its core strengths to build the best personal computing user experiences. Include GenAI to improve everyday tasks for millions of people, within the workflows and apps they already use, on the hardware they already have or will soon buy.

Do that, and put it in a billion hands.

What AI Apple Delivered at WWDC 2025

Did WWDC 2025 show Apple is serious about leading in AI-native personal computing? Let’s find out.

Core Experiences Enhanced With AI

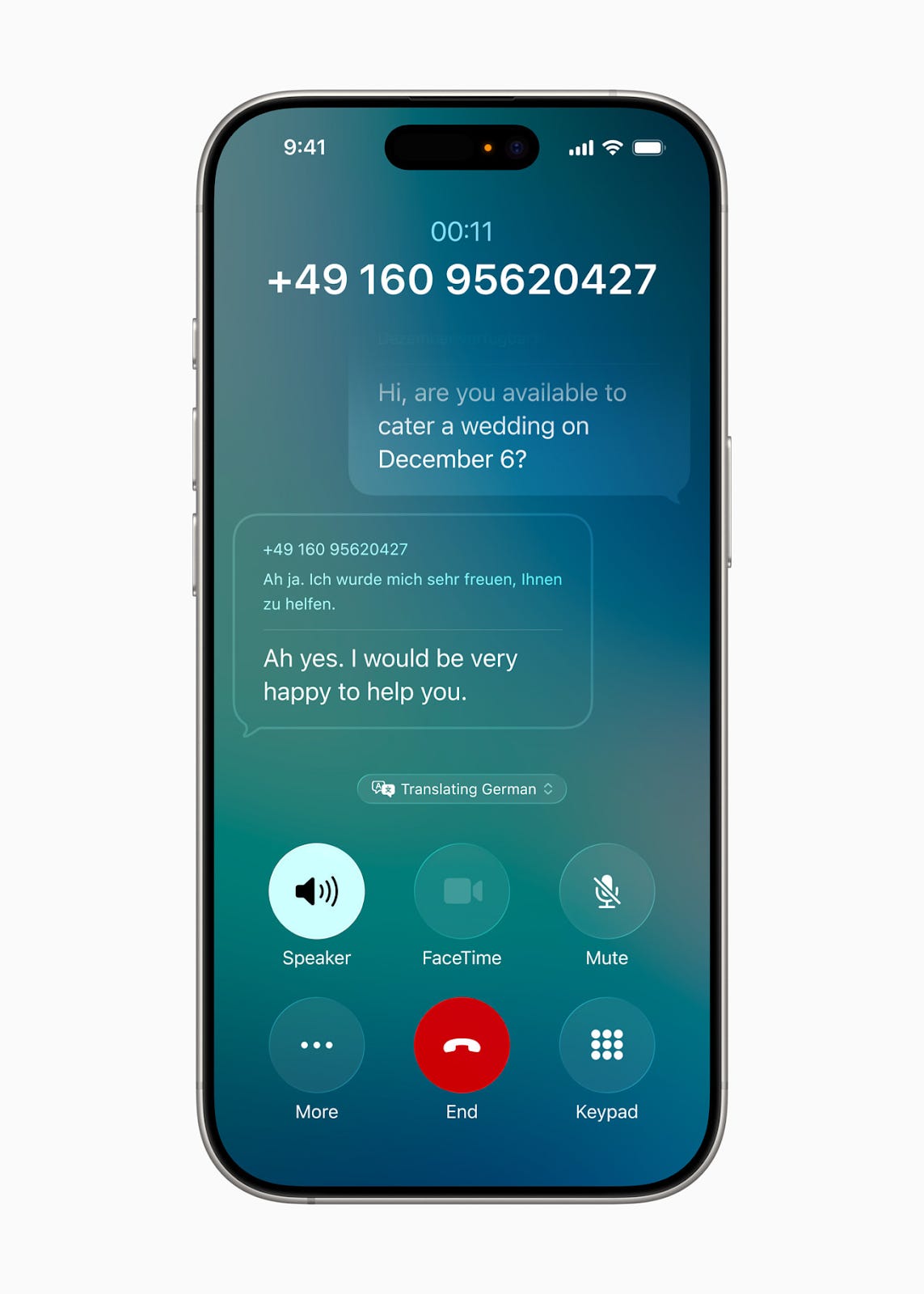

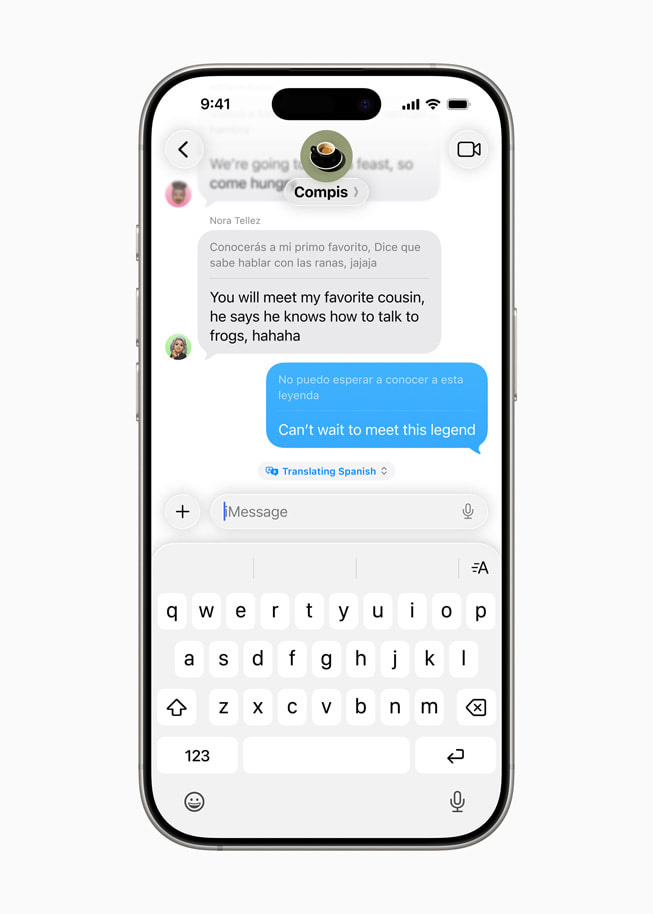

My first observation is that Apple showed that on-device GenAI is both feasible and useful by integrating it directly into core apps. Take Live Translation, for example:

For those moments when a language barrier gets in the way, Live Translation can help users communicate across languages when messaging or speaking. The experience is integrated into Messages, FaceTime, and Phone, and enabled by Apple-built models that run entirely on device, so users’ personal conversations stay personal.

On-device. Useful. Fast enough. Is it a game-changer for me? No, sadly. (I wish I had more spoken languages in my life!) But with a billion users, many in multilingual families or neighborhoods, it’ll be a real benefit to many.

Why stop at text and audio? Let the input tokens be visual! This feature looks useful:

Visual intelligence also recognizes when a user is looking at an event and suggests adding it to their calendar. Apple Intelligence then extracts the date, time, and location to prepopulate these key details into an event.

This is a real use case for me! A kid brings home a flyer from school, I snap a photo, jump into Gemini, and say: “Add this to my Google Calendar.” Or I get a travel itinerary in a PDF, screenshot it, and ask AI to turn it into a calendar event.

Now Apple promises to make that same task even easier. I can take a picture or screenshot, and my iPhone suggests creating a calendar event, right inside the UI I’m already using. No app-hopping; just a simple, native action.

Notice the pattern? Apple is weaving AI into the flow of everyday interactions, not redirecting them to a separate destination. Which means these upgrades don’t announce themselves as GenAI, but simply feel like an upgraded UX. Most people won’t even realize AI is involved, and that’s just fine.

But what if I do need general world knowledge throughout the day? I often use my iPhone and ChatGPT together, e.g., snapping a screenshot of something technical or a photo of a book I’m reading and asking for a deeper explanation. Apple has a path for that too: Visual intelligence now lets users ask ChatGPT about whatever is on their screen.

Visual intelligence already helps users learn about objects and places around them using their iPhone camera, and it now enables users to do more, faster, with the content on their iPhone screen. Users can ask ChatGPT questions about what they’re looking at on their screen to learn more, as well as search Google, Etsy, or other supported apps to find similar images and products. If there’s an object a user is especially interested in, like a lamp, they can highlight it to search for that specific item or similar objects online.

No switching apps, no copy-paste, just tap and ask.

Do I care that this step goes to ChatGPT and not a local model? In a perfect world, I’d avoid sending personal data to an AI lab eager for more training data. But that’s not a problem I expect my hardware company to solve. I already rely on ChatGPT for general knowledge, knowing the tradeoffs. What I want from Apple is simple: make it seamless to ask for help, right in the flow of how I already use my phone.

And judging by these WWDC examples, Apple is working on just that, showing AI can be used to

do useful things

for a lot of people

within their existing workflows

on their existing devices

This perfectly aligns with Apple’s business model too: I pay a premium, you give me the best experience (least friction!) possible.

Everything we’ve seen so far aligns with our first-principles observations… but what about third-party developers? Remember the conclusion we drew earlier?

Any AI aspirations Apple has must treat third-party developers as first-class citizens.

WWDC delivered on this front too.

Platform Enablement

If Apple wants to lead in AI-native personal computing, it can’t stop at building first-party apps. It must make generative AI a core capability for the entire developer ecosystem. WWDC 2025 showed that’s exactly what it’s doing. And it’s more important and more overlooked than most people realize.

Foundation Models Framework

Apple nailed it, introducing a Foundation Models framework that exposes its on-device large language model, the same one powering Apple Intelligence, directly to developers. This framework is deeply integrated with Swift, Apple’s programming language, and is designed for tight OS-level coordination. Developers can tap into it with as few as three lines of code. As Apple’s ML Research team explained in a blog post:

We also introduced the new Foundation Models framework, which gives app developers direct access to the on-device foundation language model at the core of Apple Intelligence…. Our latest foundation models are optimized to run efficiently on Apple silicon, and include a compact, approximately 3-billion-parameter model, alongside a mixture-of-experts server-based model with a novel architecture tailored for Private Cloud Compute

Apple’s vertically integrated approach marries LLMs to hardware, OS, and developer tooling in a way only Apple can, supporting the following useful features:

BTW there’s a very helpful WWDC Developer session video if you want to learn more: Meet the Foundation Models Framework

Tool calling means the AI can do more than just generate text; it can take action. If the model realizes it needs more information, it can trigger features built by the developer. These calls happen automatically, with the model itself running on device.

Streaming outputs let you see results as they’re being created. Instead of waiting for the whole response, apps can show updates in real time, like a message being typed out. This makes apps feel faster.

Adapter-based fine-tuning allows apps to specialize the model for certain tasks. Apple includes basic built-in adapters. Developers can train their own adapters for unique needs.

Session-level instructions and memory help the model stay consistent and remember the flow of a conversation. Developers can set the tone and style up front and that behavior sticks across interactions. The model also keeps track of what’s already been said so it can respond naturally as you’d expect.
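To make the tool-calling and session-memory ideas concrete, here is a minimal sketch of the general loop in Python. It is not Apple’s API (the real framework is Swift, and the calls happen automatically inside it); `ToolRequest`, `FinalAnswer`, `run_with_tools`, `fake_model`, and the `next_event` tool are all hypothetical names. The model either answers or asks for a named tool, the app runs the developer’s tool code, and the result lands in a transcript the model sees on its next turn.

```python
from dataclasses import dataclass
from typing import Callable, Union


# Hypothetical message types for this sketch; NOT the Foundation Models API.
@dataclass
class ToolRequest:
    name: str      # which developer-registered tool the model wants
    argument: str  # a single string argument keeps the sketch small


@dataclass
class FinalAnswer:
    text: str


ModelStep = Union[ToolRequest, FinalAnswer]


def run_with_tools(
    model: Callable[[list[str]], ModelStep],
    tools: dict[str, Callable[[str], str]],
    prompt: str,
    max_steps: int = 5,
) -> tuple[str, list[str]]:
    """Loop until the model answers, running any tool it asks for.

    Returns the final answer plus the transcript (the session's "memory")."""
    transcript = [f"user: {prompt}"]
    for _ in range(max_steps):
        step = model(transcript)
        if isinstance(step, FinalAnswer):
            transcript.append(f"model: {step.text}")
            return step.text, transcript
        # The model asked for a tool: run the developer's code, record the result.
        result = tools[step.name](step.argument)
        transcript.append(f"tool {step.name}({step.argument}): {result}")
    raise RuntimeError("model did not produce an answer within max_steps")


# A scripted stand-in for the on-device model (hypothetical behavior).
def fake_model(transcript: list[str]) -> ModelStep:
    if not any(line.startswith("tool ") for line in transcript):
        return ToolRequest(name="next_event", argument="today")
    return FinalAnswer(text="Your next event is " + transcript[-1].split(": ", 1)[1])


tools = {"next_event": lambda day: f"school concert at 6pm ({day})"}
answer, transcript = run_with_tools(fake_model, tools, "What's on my calendar?")
print(answer)  # Your next event is school concert at 6pm (today)
```

In this sketch the transcript doubles as the session’s memory, and `max_steps` caps the loop so a confused model cannot call tools forever.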

Apple is making GenAI a core system capability (like Metal or Core ML) available to every app across its ecosystem. And with over a billion active devices, that makes it one of the most widely accessible GenAI platforms in the world.

ML Team Strategy

In the blog post, Apple’s ML Research team is explicit about the goal:

It is not designed to be a chatbot for general world knowledge.

The goal is to make AI a core platform capability.

There’s an argument that Apple can be thought of as an edge AI infrastructure provider. 🤔

A Cultural Surprise

When diving deep into the weeds, I noticed a surprising cultural shift.

Apple’s ML team is leaning into “move fast and break things”! Apple, the consumer hardware company, tends to wait until things are perfect. Yet even with the baggage of “announcing Apple Intelligence too early,” the ML team is pushing ahead, even if things aren’t perfect for developers.

We've carefully designed the framework to help app developers get the most of the on-device model. For specialized use cases that require teaching the ~3B model entirely new skills, we also provide a Python toolkit for training rank 32 adapters. Adapters produced by the toolkit are fully compatible with the Foundation Models framework. However, adapters must be retrained with each new version of the base model, so deploying one should be considered for advanced use cases after thoroughly exploring the capabilities of the base model.

Retraining with each new version of the base model is painful, but I love that this didn’t block Apple from shipping it.

The insight is that Apple is willing to be imperfect for developers, who will tolerate a bit of pain to move faster, sooner. This is strategically sound! Best to get app devs going on GenAI experiences ASAP.
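Why must adapters be retrained for each new base model? A rough illustration in Python, assuming Apple’s “rank 32 adapters” are LoRA-style low-rank updates (the quote says only “rank 32”, so the LoRA form is an assumption): each adapted weight matrix W gets two small learned matrices, B and A, whose product is added to W. The layer size below is hypothetical, chosen only for the arithmetic.

```python
def lora_adapter_params(d_out: int, d_in: int, rank: int = 32) -> int:
    """Extra parameters for one low-rank adapter on a d_out x d_in weight.

    Assumed LoRA form: learn B (d_out x rank) and A (rank x d_in), and use
    W + B @ A at inference. B and A only make sense relative to this W."""
    return rank * (d_out + d_in)


def full_params(d_out: int, d_in: int) -> int:
    """Parameters in the full weight matrix W itself."""
    return d_out * d_in


d = 2048  # hypothetical square layer size, for illustration only
print(lora_adapter_params(d, d))                      # 131072
print(full_params(d, d))                              # 4194304
print(lora_adapter_params(d, d) / full_params(d, d))  # 0.03125
```

Under this assumption the adapter is tiny compared with the layer it modifies, but it is a correction fitted to one specific W. A new base model ships different weights, so the old correction no longer applies, which fits Apple’s note that adapters must be retrained with each base-model version.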

The Apple ML team also highlighted other limitations in a WWDC session, including a context window of only 4,096 tokens, shared between prompt and output:

If you put 4,000 tokens in the prompt, you've got 96 left over for the output
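The shared-window arithmetic in that quote is simple enough to write down. A minimal Python sketch, assuming token counts are already known (real counts come from the model’s own tokenizer); `output_budget` is a hypothetical helper, not part of Apple’s framework:

```python
CONTEXT_WINDOW = 4096  # on-device limit cited in the WWDC session


def output_budget(prompt_tokens: int, context_window: int = CONTEXT_WINDOW) -> int:
    """Tokens left for the response when prompt and output share one window."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    return max(context_window - prompt_tokens, 0)


print(output_budget(4000))  # 96
print(output_budget(1000))  # 3096
print(output_budget(5000))  # 0: the prompt alone overflows and must be split
```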

This is quite small, and devs will have to hack around it:

If you have an entire book's worth of text, you're not gonna be able to fit that into 4096 tokens, and so you're going to have to do some kind of chunking. You can split it up into windows, and then look at each of those individually. You can also do overlapping windows, so you have some amount of overlap between each one, and you just feed each one of those into a new session. There's techniques like recursive summarization, so you break your book down into, like, five or six chunks, and then you summarize each of those five or six chunks, and you stick those things together, and then you perform some operation over that until you get it into a size that you can work with. And none of these are a bulletproof solution, none of them work in every instance. You're just gonna have to experiment with lots of different approaches and have that eval set on hand so that you can tell which ones are working over a representative distribution of the sorts of things your real users are gonna do with it.
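The two chunking strategies from the quote, overlapping windows and recursive summarization, can be sketched in a few lines of Python. Assumptions: the text is already split into a list of tokens, and `summarize` is a hypothetical stand-in for sending one chunk to a fresh model session; the toy summarizer in the demo just keeps every fourth token. As the quote stresses, judging real results would still need an eval set.

```python
from typing import Callable


def overlapping_windows(tokens: list[str], size: int, overlap: int) -> list[list[str]]:
    """Split tokens into windows of `size`, each sharing `overlap` tokens with the previous."""
    if not 0 <= overlap < size:
        raise ValueError("need 0 <= overlap < size")
    step = size - overlap
    windows = []
    for start in range(0, len(tokens), step):
        windows.append(tokens[start:start + size])
        if start + size >= len(tokens):
            break  # this window already reaches the end of the text
    return windows


def recursive_summarize(
    tokens: list[str],
    summarize: Callable[[list[str]], list[str]],
    limit: int,
    overlap: int = 0,
) -> list[str]:
    """Summarize each chunk, join the summaries, and repeat until the text fits in `limit`."""
    while len(tokens) > limit:
        chunks = overlapping_windows(tokens, limit, overlap)
        shorter = [t for chunk in chunks for t in summarize(chunk)]
        if len(shorter) >= len(tokens):
            raise RuntimeError("summarizer is not shrinking the text")
        tokens = shorter
    return tokens


def keep_every_fourth(chunk: list[str]) -> list[str]:
    """Toy 'summarizer' for the demo: keeps every fourth token."""
    return chunk[::4]


words = [f"w{i}" for i in range(10)]
print(overlapping_windows(words, size=4, overlap=1))
# [['w0', 'w1', 'w2', 'w3'], ['w3', 'w4', 'w5', 'w6'], ['w6', 'w7', 'w8', 'w9']]

book = [f"w{i}" for i in range(10_000)]
print(len(recursive_summarize(book, keep_every_fourth, limit=4096)))  # 2500
```

The shrink check is a deliberate design choice: a summarizer that fails to reduce the text would otherwise loop forever.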

So that’s gonna be pretty painful too. And the local model isn’t multimodal yet, but devs can still hack together multimodality by combining frameworks:

One of the really neat things that you can do is you can take all of the other frameworks that we have, and we can plug them together. So, for example, you can use the new speech analysis framework to caption what a developer or what your users are saying. And if that user says, Hey, make me an image of a panda on a tricycle, you can take the foundation model framework and ask the foundation model framework to rewrite that user's request into an image description, and then you can feed that image description into image playgrounds. And there you go from audio in to image out by plugging it through these three different frameworks…I think, with the availability of the vision and speech and sound, and the image creator API, you can string all of these together into nice pipelines.
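The audio-in, image-out pipeline described above is function composition. A Python sketch of that shape, where `transcribe`, `rewrite_as_image_prompt`, and `generate_image` are hypothetical stubs standing in for the speech framework, the Foundation Models framework, and Image Playground (the real APIs are Swift, and none of their calls are reproduced here):

```python
from functools import reduce
from typing import Any, Callable

Stage = Callable[[Any], Any]


def pipeline(*stages: Stage) -> Stage:
    """Compose stages left to right: each stage's output feeds the next."""
    return lambda value: reduce(lambda acc, stage: stage(acc), stages, value)


# Hypothetical stubs for the three frameworks named in the talk.
def transcribe(audio: bytes) -> str:
    """Speech-to-text stage (stub: pretends the audio is UTF-8 text)."""
    return audio.decode("utf-8")


def rewrite_as_image_prompt(request: str) -> str:
    """Language-model stage (stub: pulls out the subject after 'image of')."""
    subject = request.split("image of", 1)[-1].strip()
    return f"illustration: {subject}"


def generate_image(description: str) -> dict:
    """Image-generation stage (stub: returns a description of the image)."""
    return {"kind": "image", "description": description}


audio_to_image = pipeline(transcribe, rewrite_as_image_prompt, generate_image)
print(audio_to_image(b"Hey, make me an image of a panda on a tricycle"))
# {'kind': 'image', 'description': 'illustration: a panda on a tricycle'}
```

Keeping each stage a plain function makes it easy to swap one, say a different speech model, without touching the others.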

Again, this is painful, but it’s a good sign because Apple is moving fast. (Of course, it would have been nice if they’d done this last year, but now is the next best time.)

Apple’s GenAI platform strategy seems to be: perfection for consumers, pragmatism for developers. The ML team is shipping fast, even if it means devs deal with adapter retrains, token limits, or cobbled-together multimodal pipelines. And that’s the right call. Developers would rather build now and iterate than wait for perfect tools later. For GenAI to take off across Apple’s platforms, speed beats polish for dev tooling.

What about OpenAI?

Apple builds AI-powered devices from the ground up, from silicon to OS to dev tools. They’re competing in the AI-native personal computing race.

Sam & Jony’s io seemingly aspires to enter this race; io is attempting to build a family of AI-first devices from the ground up, designed to make AI feel natural and always available.

But Apple has a monumental head start. io has to build it all from scratch (hardware, software, dev tools) and solve the cold start problem of attracting both users and developers to the platform.

And then there’s the form factor. Will io’s device simply supplement the iPhone and Mac, capping Apple’s growth, perhaps, but not cannibalizing iPhone sales?

Yet the threat of competition is real enough that Apple can’t afford to slow down.

Whether it’s io or someone else, a broader risk is that a purpose-built, AI-native device ends up outshining Apple’s retrofitted approach. Especially if Apple plods along too cautiously, as incumbents often do.

Google?

Google has everything it needs to win in AI: Pixel phones, Android OS, Gemini models, and custom TPUs. On paper, it’s the ultimate full-stack AI company, from edge to cloud.

But in practice, consumer hardware feels like a Mountain View side project. With ad revenue driving the business and search under threat, it’s easy to see why. Still, that makes it hard to believe Google will prioritize AI-native personal computing enough to turn its technical edge into great products.

And even then, it would be in a similar position to Apple, adding GenAI into existing form factors and experiences rather than building new ones from the ground up like io or other startups.

On the other hand, the phone is probably the central consumer device for the next decade or so anyway, and open ecosystems like Android might be able to harness more developers to innovate faster than Apple. Moreover, Android has billions of users too.

So again, Apple must keep its sense of urgency to stay ahead of upstarts and Google.

Proactive Intelligence

Beyond making existing UX flows simpler, WWDC showcased proactive experiences designed to anticipate your needs without you asking. These “anticipatory” experiences run on-device for a reason, as we discussed recently:

Local AI can unlock user experiences that wouldn’t otherwise be possible, primarily for economic reasons. I call these “anticipatory” experiences. This kind of anticipatory work runs silently in the background, always on. This continuous, anticipatory inference isn’t cost-effective at scale with cloud inference.

A simpler word is “proactive”. Think of things like showing the right app at the right time, suggesting a message reply, or surfacing the right workout. At WWDC, Apple showed how watchOS uses local data like time, location, and routine to make smarter predictions in the Smart Stack.

Smart Stack, the watchOS feature that uses contextual awareness to show shortcuts to the apps you might want to open, is getting features that make it smarter about your surroundings.

This year, the Smart Stack improves its prediction algorithm by fusing on-device data, like points of interest, location, and more, and trends from your daily routine, so it can accurately predict features that could be immediately useful to you.

And Apple developers can now build proactive features like these in an economically viable way, since inference on the user’s own device doesn’t run up a per-call cloud bill.

Siri, The Elephant in the Room

There’s real value in app-level intelligence, but years ago Apple sold a vision of an intelligent layer on top of these apps. The dream of an assistant that ties it all together? That’s Siri, a promise Apple still hasn’t fully delivered. That’s a hard expectation to unwind.

The LLM wave brought renewed optimism. Apple Intelligence looked like a decent start, but it didn’t ship on time and key features are still missing. Some say Apple should just plug in OpenAI or Anthropic and move on. Instead, Apple is staying the course, and the Siri reboot reportedly won’t arrive until 2026.

Will the Siri delay matter? Probably not. Apple’s massive installed base gives it room to wait. For incumbents as big as Apple, the risk of getting it wrong might outweigh the reward of being first. It pains me to say this, because my personal modus operandi is to move as fast as possible, *especially* if you’re the incumbent. But the logic goes: a broken Siri rollout could erode trust and frustrate users, leading to churn or lower customer lifetime value (LTV). A great rollout? It likely won’t flip many Android users, and it’s not clear how Siri increases LTV.

Why won’t amazing Siri convert loads of Android users? It’s not clear voice will become the primary interface on phones, even with a smarter Siri. That could change with glasses—like Meta’s Ray-Bans, Android XR, Meta Orion—but on phones, I’m skeptical. So it’s nice, but it’s just another feature; just because it sits on top of your apps doesn’t make it obvious that it would change your life. Maybe for Apple HomePod though!

So a better phone assistant isn’t going to shift the smartphone market for Apple. But what does move the needle?

The apps people live in. Everyone has their go-to handful of daily use apps. If those apps become more useful or frictionless on Apple devices thanks to on-device AI, that’s what deepens the moat. Not a single headline feature, but a thousand small ones.

Yes, Siri matters. But empowering developers to build those thousand in-app experiences? That matters more.

Paid Subscribers

But what about the race to train massive, cloud-scale foundation models? In the long run, doesn’t Apple need a world-class general knowledge model of its own? We’ll dive into this below.

You might also be wondering: Where does Apple still fall short in the shift to AI-native personal computing? What blind spots or structural weaknesses could hold it back? We’ll dig into all of that too, for paid subscribers.

If you’re looking for a strategy partner, get in touch to explore our consulting work at Creative Strategies. We work across the AI stack—from consumer devices to AI accelerators, memory, networking, robotics, automotive, and more. Our reach spans from the biggest tech companies to startups pushing the boundaries of what’s possible.