Meta’s ROIC Strategy: GEM Now, LLMs Later

Meta doesn't need the smartest AI. It needs the most profitable one.

The Street has struggled to understand Meta’s CapEx strategy. Sentiment has been all over the place, and so has the stock. Down 15% after Q3 earnings, up 15% after Q4.

But Meta articulated the same strategy the entire time, and it’s actually quite simple. GEM now, LLMs later.

GEM now: immediate, measurable ROI in the core ads business

LLMs later: incremental core gains today, substantial potential upside later

Let’s dig in. Then we’ll address potential risks.

GEM Now

Let’s start with the basics.

Facebook launched the News Feed in 2006, ads in 2007, and by 2011 the feed was algorithmically curated. From the beginning, what you see in the feed and which ads you are shown have been driven by machine learning. Meta has used AI infrastructure to drive engagement and advertising revenue for nearly two decades, evolving from CPU-based datacenters to GPU-accelerated systems.

This is not a new pivot. It is the core of the business. Meta’s business has always depended on using AI infrastructure to drive engagement and generate advertising revenue.

Today, that stack includes Andromeda for large-scale ad retrieval, Lattice as a unified prediction architecture across objectives, and GEM, Meta’s foundation model for ad ranking. They are distinct components, but together they form a single system optimized around monetization.

BTW, to learn the basics of Meta’s core business, I highly recommend this podcast episode between Eric Seufert and Matt Steiner, Meta’s Vice President of Monetization Infrastructure, Ranking & AI Foundations.

An important topic to understand is the Generative Ads Recommendation Model (GEM), Meta’s foundation model for ad ranking. Conceptually, it’s a recommendation system that makes one decision, billions of times per day: which ad should this person see right now?

Again, ML recommendation systems have always driven Meta’s business, but this latest model, GEM, introduces an important innovation. Meta figured out how to unlock scaling laws for recommenders in a similar fashion to LLMs, where more training compute yields better rankings:

“This is the first time we have found a recommendation model architecture that can scale with similar efficiency as LLMs.”

Better rankings lead to more revenue. So when Meta says they want to buy more GPUs, there’s a clear line of sight to increased revenue.

This was missed by investors, who early on punished Meta’s CapEx increase with confused statements like “well Meta isn’t a CSP, so it’ll take longer to get an ROI on that CapEx”. Ummm… have you compared cloud margins to Meta’s advertising margins? Meta’s gonna have no trouble paying off that incremental compute with incremental advertising dollars from GEM improvements, even if it takes a bit longer than renting out a GPU…

Investors need to think about the ROI of Meta’s incremental compute differently. Remember, Meta is building recommendation systems like GEM and training frontier LLMs. The ROI has to account for these separately.

So, some things to note. LLM AI labs build massive models (e.g. 1T+ params) that run in production at enormous cost (think Claude Opus). GEM doesn’t work that way. From CFO Susan Li on the recent Q425 earnings call in January,

“So we don’t use our larger model architectures like GEM for inference because their size and complexity would make it too cost-prohibitive. The way that we drive performance from those models is by using them to transfer knowledge to smaller, lightweight models that are used at runtime.”

GEM is a teacher. It absorbs massive user, content, and advertiser signals, then distills that knowledge into small, latency-sensitive models that serve ads. Inference remains cheap. Scaling GEM during training does not translate into a proportional increase in runtime model size or serving cost.

And that efficiency is improving:

“In Q3, we made improvements to GEM’s model architecture that doubled the performance benefit we get from adding a given amount of data and compute.”

Double the performance. In other words, the same incremental compute+data is now producing roughly twice the impact.

Viewed through that lens, the right question for evaluating Meta’s CapEx is not whether its models beat OpenAI or Anthropic on public benchmarks of how “smart” the AI is. Rather, GEM CapEx should be measured as incremental revenue per dollar of compute. Put differently, Meta is underwriting CapEx against an internal efficiency curve tied directly to monetization, not against leaderboard performance.

On a risk-adjusted basis, I think we can all agree GEM is a more defensible place right now to deploy capital than frontier LLMs, whose returns remain uncertain. When do OAI and Anthropic become profitable? Whereas GEM is immediately profitable… As I said before,

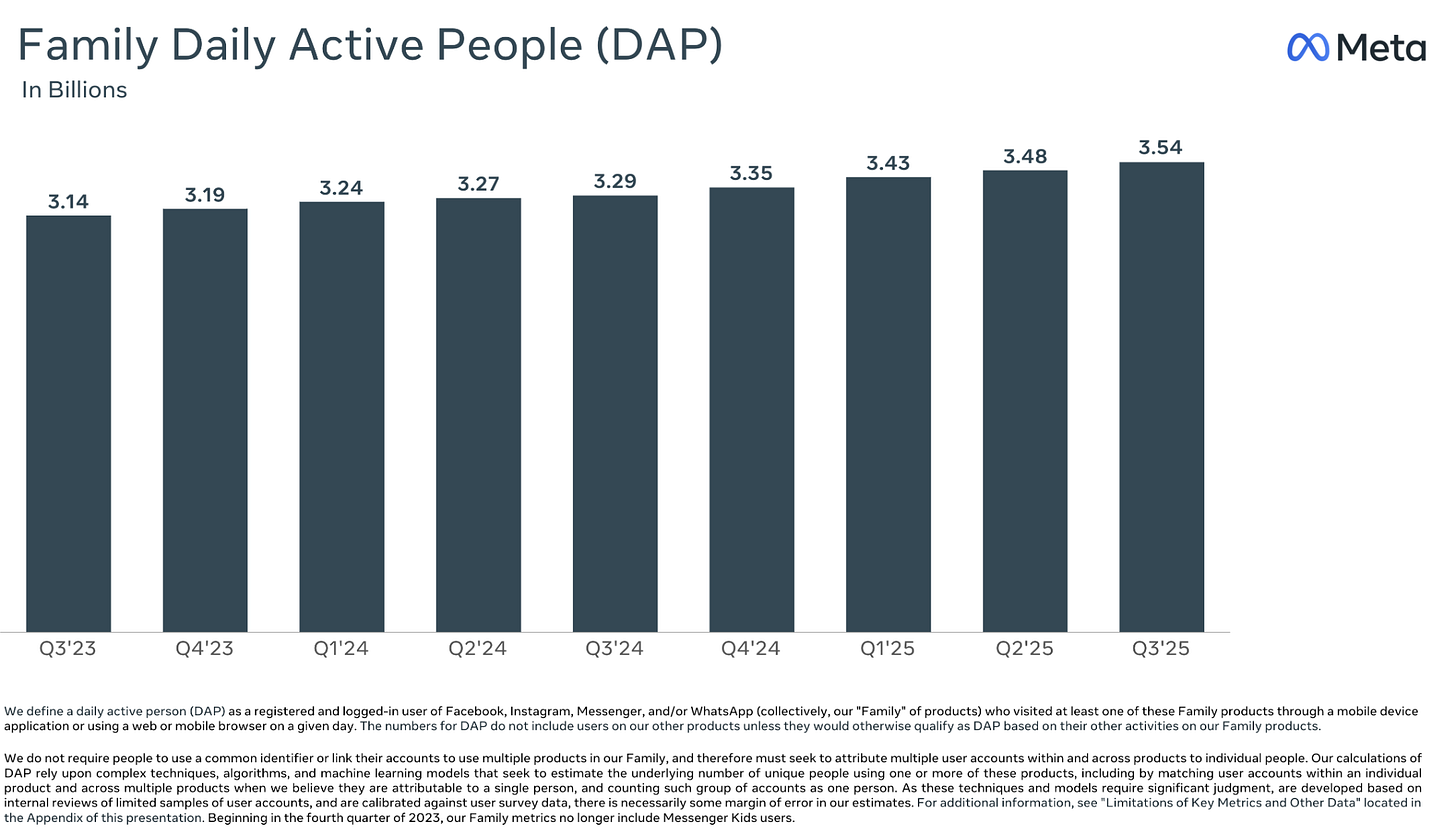

Wouldn’t others (cough cough OpenAI) LOVE to have 3.5B DAILY active users… and …. Wait for it… make almost $60 PER USER PER YEAR!

As we’ll see later, of course, Meta is investing in LLMs, and there’s upside there… but just wanted to point out that CapEx spent on GEMs is a no-brainer.

Now, you might ask, are we sure additional CapEx spent on GEM is truly driving revenue? Concrete results from the earnings call:

+5% Instagram ad conversions

+3% Facebook Feed ad conversions

+3.5% Facebook ad clicks in Q4, alongside sequence learning

With a $150B+ ad business, each of those incremental points seriously adds up.

Again, that’s a lot more straightforward than investing in larger training clusters to unlock an extra IQ point for a GenAI LLM. I’m not saying GenAI LLMs aren’t worth it — just pointing out the clear line between CapEx and measurable results.

And while incremental revenue from improved ad recommendation is great, so is decreasing the cost of that compute. After all, a dollar saved goes straight to the bottom line. One way to decrease cost is through compute diversification. Like with custom silicon (MTIA):

“We extended our Andromeda ads retrieval engine, so it can now run on NVIDIA, AMD, and MTIA. This, along with model innovations, enabled us to nearly triple Andromeda’s compute efficiency.”

“In Q1, we will extend our MTIA program to support our core ranking and recommendation training workloads, in addition to the inference workloads it currently runs.”

This looks a lot like the Microsoft Maia conversation, where Saurabh discussed building custom silicon to optimize known workloads:

I hope to talk with Meta about MTIA in the future so we can dig into the rationale and ROIC of the CapEx invested in the MTIA roadmap.

And one interesting note. While GEM focuses on ads, Meta is explicitly unifying ads ranking with organic content:

“We’re also going to start validating the use of ad signals in organic content recommendations as we continue to work towards having a more shared platform for organic and ads recommendations over time.”

One model ranking both organic and paid content across 3.5B daily users. Sounds like a good use of CapEx.

Part II: LLMs Later

So GEM is the sure thing — CapEx with a clear, measurable payoff. But Meta isn’t stopping there. Zuck is investing heavily in Meta Superintelligence Labs, co-led by Alexandr Wang and Nat Friedman, to build frontier LLMs. And this is where most of the Street’s anxiety lives, because it pattern-matches to the metaverse spending era. But the framing of “Meta needs to beat OpenAI and Anthropic” misses the point.

The way I think about it: GEM alone can deliver serious returns on invested capital. LLMs are layered on top. And some of that LLM upside is already showing up in the core business today. Some of it is more speculative — future products and features that could expand engagement in ways we can’t fully predict, yet.

Let’s start with the straightforward stuff that LLM enables:

Better understanding of what people actually want. Traditional recommendation systems are mostly pattern-matching on past behavior. LLMs bring something different, as they can reason about what someone might be interested in, even without a ton of historical data:

“Building new model architectures from the ground up that will work on top of LLMs, leveraging the world knowledge and reasoning capabilities of an LLM to better infer people’s interests.”

Making it easier for businesses to create ads. One of the biggest constraints in digital advertising is creative (photos, videos, etc). Small businesses don’t have design teams cranking out polished video ads or the means to outsource. GenAI changes that. If anyone can quickly and cheaply generate ad variations, more businesses can run better ads. That’s good for advertisers and good for Meta’s revenue. From 2026: AI Drives Performance:

AI is also powering stronger ad creative. In Q4 2025, the combined revenue run-rate of our video generation tools hit $10 billion, with quarter-over-quarter growth nearly three times faster than overall ads revenue.

Solving the cold start problem. Recommendation systems struggle with new content because there’s no engagement data to work with yet. LLMs can fill that gap because they can infer what a piece of content is about and who might care before anyone’s even seen it:

“We will work on more deeply incorporating LLMs into our existing recommendation systems… useful for content that has been more recently posted since there’s less engagement data.”

These are all additive to GEM. But there’s also the less predictable upside of future features that expand the surface area of engagement itself. One that caught my eye is AI dubbing. Meta is auto-translating videos into nine languages, and hundreds of millions of people are watching them every day.

“One area we’re already seeing promise is with AI dubbing of videos into local languages. We are now supporting nine different languages, with hundreds of millions of people watching AI-translated videos every day. This is already driving incremental time spent on Instagram, and we plan to launch support for more languages over the course of this year.”

That’s content that simply wouldn’t have reached those users before. More content people want to watch means more time spent, which means more ad inventory.

IMO the bull case is clear. But what about the risks?

Behind the paywall, we dig into the noise surrounding Meta’s AI strategy: why Meta is spending billions on frontier models when it could just use OpenAI or Anthropic as teachers, whether this is really different from the metaverse spending era that has yet to payoff, and whether there’s a hard ceiling on engagement gains when you already reach half the planet every day. We also cover a less obvious near-term catalyst for incremental time spent on Meta’s platforms.