The Nvidia Business Hiding in Plain Sight

And it's bigger than Wall Street thinks

I’ve had a lot of requests to better understand Nvidia’s autonomy business. Here it is. I meant to get this out sooner, but it took a while. So let’s get into it!

Wall Street models Nvidia’s autonomy business as a ~$1-2B automotive SoC business competing with Qualcomm and Mobileye for in-car socket wins. But Nvidia’s current automotive opportunity is actually $5-10B. Why? The data center infra revenue from automotive OEMs is significantly larger than the in-car silicon revenue, but it’s hidden within the Data Center revenue line. Let’s dig into that more, and also ask:

Why would Nvidia open-source its VLA models? Expensive to train!

How does Nvidia’s autonomy business compare to Mobileye and Qualcomm?

Is custom silicon from Tesla and Rivian a threat?

Could Chinese OEMs be a tailwind?

Does AMD have a play here?

Three Computers, Not One

At CES 2026, Jensen explained the “three computers” concept:

“Inferencing the model is essentially a robotics computer that runs in a car... But there has to be another computer that’s designed for simulation… One computer, of course, the one that we know that Nvidia builds for training the AI models.”

So the three “computers” are:

Frontier training cluster (e.g., NVL72 or Rubin)

Simulation node (could be on older GPUs like Hopper, or cost-optimized GPUs like Blackwell RTX Pro 6000, etc.)

Onboard compute in the car (e.g. Orin, Thor)

Nvidia’s reported automotive revenue covers only #3, which dramatically understates the real business. Jensen said that automotive is:

“already a multibillion-dollar business... venture to say somewhere between $5 billion to $10 billion... And by the end of 2030, by the end of the decade, it’s going to be a very large business.”

JPMorgan Research confirmed as much in its October 15, 2025 report, From Chips to Cars: Deep Dive into ADAS and Robotaxis:

“Within its automotive industry vertical, Data Center compute/networking currently represents a more significant revenue stream for Nvidia than its in-car hardware/software business.”

Thus, Nvidia’s serviceable addressable market spans all three computers: training infra, simulation, and onboard compute. That’s a fundamentally larger SAM than any competitor focused only on the in-car socket.

Given Nvidia’s Ampere/Hopper/Blackwell dominance in data center GPUs, it’s very likely Nvidia gets paid for computers #1 and #2 for most OEMs regardless of which vendor wins the in-car socket. Nvidia can lose, but still win.

The Three-Computer Business Model

Let’s go one layer deeper.

Training is the largest revenue driver for Nvidia. Every OEM needs massive GPU compute to train AV models on its own sensor data. For example, per JPMorgan’s February 2026 Korea Autos note, Hyundai alone “signed a supply contract last October with Nvidia for 50,000 Blackwell GPUs to build its data center.” At roughly $30-40K per GPU, the GPUs alone come to $1.5-2.0B; add networking and that’s an estimated $1.5-2.5B deal from a single automotive OEM, rivaling Nvidia’s entire reported annual automotive revenue! But it shows up in the Data Center segment, not Automotive. This is the perfect illustration of why Nvidia’s reported autonomy business looks undersized: the largest revenue streams are hiding in a different line item.
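For anyone who wants to rerun that back-of-envelope math with their own inputs, here is a minimal Python sketch. The GPU count and the $30-40K price band come from the paragraph above. The networking uplift of 0% to 25% is my own assumption, picked only so the output spans the same $1.5-2.5B range; it is not a disclosed contract term, and the function name is purely illustrative.

```python
def deal_value_range(gpu_count, price_low, price_high,
                     net_uplift_low=0.0, net_uplift_high=0.25):
    """Return a (low, high) dollar estimate for a GPU supply deal.

    net_uplift_* is a hypothetical networking adder expressed as a
    fraction of GPU spend (an assumption, not a reported figure).
    """
    low = gpu_count * price_low * (1 + net_uplift_low)
    high = gpu_count * price_high * (1 + net_uplift_high)
    return low, high


# Hyundai example: 50,000 Blackwell GPUs at roughly $30-40K each.
low, high = deal_value_range(50_000, 30_000, 40_000)
print(f"Estimated deal size: ${low / 1e9:.1f}B to ${high / 1e9:.1f}B")
```

Swap in a different GPU count or price band to size other OEM training clusters the same way. The point is less the exact number than the order of magnitude relative to the reported Automotive line.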

Simulation in Omniverse is important for safe AV development. No one should be skipping computer #2. An example workflow: when something fails on the road, the autonomy ML team reconstructs the scene using NuRec (neural reconstruction) and tests a fix on exactly that failure.

That might seem crazy at first blush. No harm if your reaction is: wait, we feel confident putting our lives in the hands of a model that’s… tested on computer simulations!?

But the fidelity of such a simulation is quite good these days.

Herman Ross, Nvidia’s Director of Simulation Ecosystem Development, noted that reconstructed sensor data is “pretty much impossible to tell apart from the real sensor. So the gap there is considered to be close to zero.”

Anyway, after reconstructing the scene, Cosmos Transfer can give you many “similar but different” scenarios for testing. So a single recorded driving scene can be transformed via prompt into a different location and different weather pattern in under 60 seconds.

In-car compute is the final piece. Nvidia has the AGX Orin and Thor portfolio, from entry-level (~100 TOPS Orin) through dual-Thor for L3/L4.

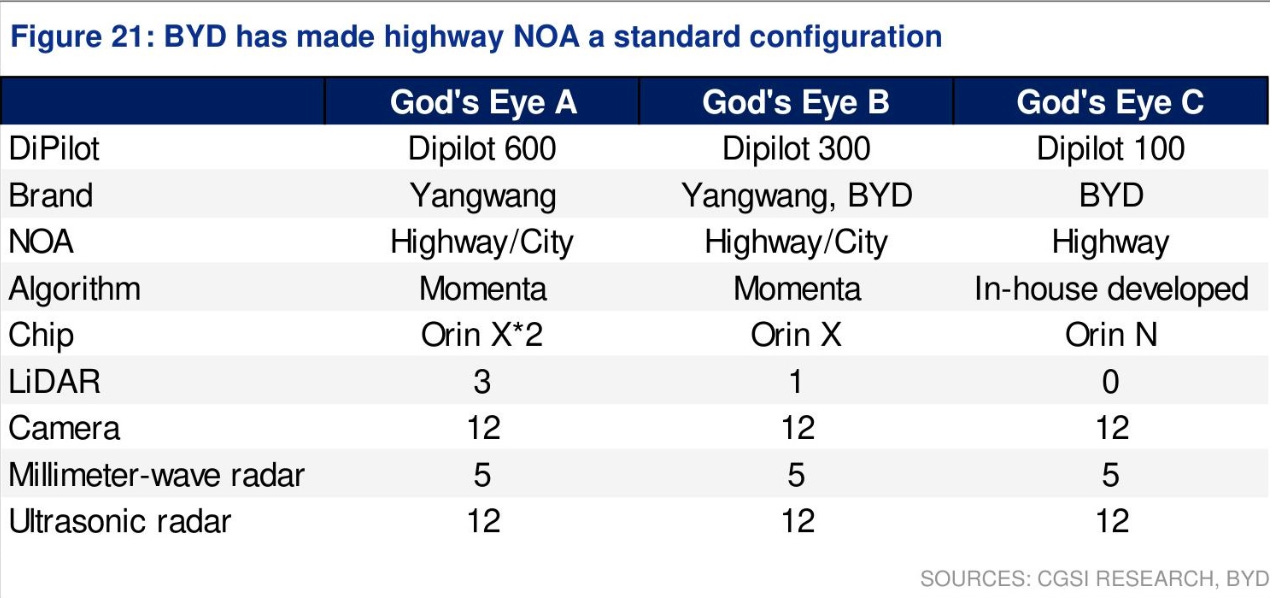

BYD, for example, uses the entry Orin for its God’s Eye highway L2+ product.

Rivian’s Gen2 platform showed how this business model plays out in practice: it ran on dual Orin chips in the car and accessed “tens of thousands of GPUs” in the cloud for training, without owning the clusters.

So that’s the framework: three computers, not one. Training dwarfs the in-car business. Simulation adds up too. Now, in the long run, I think the onboard compute market will grow significantly as well. I’m bullish on that TAM long-term. Won’t more than half of new cars by 2030 come equipped with autonomy functionality? And that’s before counting commercial trucking (e.g. Aurora) and robotaxis.

Ok, so now you appreciate the Three Computer business model. But that still leaves questions.

Why would Nvidia open-source its AV models?

Nvidia has tons of OEM engagements — how do you monetize that without building a bunch of custom stacks? After all, they each have different sensors and compute configurations...

Qualcomm leaves one of the computers (training) to Nvidia, but what about simulation?

Which OEMs are actually software-defined versus just marketing it? And how might that impact adoption?

What does the L2-to-L4 economic shift mean for who captures value over the next five years?

And might AMD have a play?

We’ll get into all of that below the fold.

I’m aiming for institutional-grade quality, but this is industry analysis, not investment advice.