Qualcomm’s Opportunity

Consumer Device AI, Hands-Free UX, Meta Ray-Bans, Apple, Arm, QCT and QTL, Developer Economics, AMD

I’ve been noodling on a weakness of AMD’s AI portfolio, namely the lack of AI hardware for consumer devices.

Sure, laptops will always have a slice of the consumer market. But a significant share of inference will occur on smartphones and, increasingly, on hands-free devices like glasses (e.g., Meta’s Ray-Bans), headphones, smart speakers, and more.

AMD’s current portfolio is not positioned to ride the incoming consumer inference wave. While Apple is the top-of-mind frontrunner in consumer electronics, Qualcomm is also a strong contender to benefit from consumer Gen AI.

This post will explain why GenAI on consumer devices is coming and make the bull case for Qualcomm as a primary beneficiary. We will also discuss competition from Apple, the impact on Arm, similarities to Nvidia, the timing of consumer GenAI, and the bear case.

Phones — Whenever, Wherever

Smartphones’ mobility and usability make them the dominant consumer computing device. They enable users to stay connected, entertained, and productive regardless of location or time. But are they the be-all and end-all of consumer devices? Let’s unpack the underlying jobs that smartphones enable so we can reason about future consumer electronics form factors in the generative AI world.

The original use case of mobile phones was narrow in scope: transmit voice between two parties. Eventually, screens and processing power were added to the phone, enabling users to send and receive text messages and play games.

Advances in cellular networks enabled internet-connected smartphones. This bandwidth increase, combined with the inclusion of cameras in phones, spawned apps like Instagram and fueled the surge in video streaming.

We can generalize about smartphones' “jobs to be done” — they enable data generation, transmission, and consumption anytime, anywhere.

Desktop PCs can’t go with you. Laptops and tablets can, but they are too big to carry easily. Pagers and watches are mobile, but they make information consumption challenging for many use cases, like reading this article.

Smartphones are the superior computing form factor for a broad set of use cases, allowing users to generate, transmit, and consume data anytime, anywhere. As such, they will naturally be the leading platform for consumer AI inference.

The Case for Hands-Free

That said, now that we have almost two decades of iPhones under our belts, it’s time to say it plainly and out loud: the smartphone user experience sucks for many use cases.

It’s easy to think of situations where pulling out a phone to obtain information is a disruption and annoyance. Even more importantly, there are safety issues with humans staring at tiny screens in their hands or laps instead of paying attention to reality.

Learning to ride a bike as a kid today is terrifying: try being four feet tall and pushing your bike across an intersection in front of an idling F-150 whose driver is staring at their phone. We need a hands-free solution for drivers. It could be an assistant embedded in the vehicle or any device with a speaker and microphone that the driver wears, such as headphones, a watch, or glasses.

Of course, Siri and Alexa were supposed to solve this already, but sadly, they proved too dumb.

We wanted human-like intelligent assistants who could understand what we were asking and do our bidding. Instead, we got canine-like assistants that happily respond to a few simple commands but nothing more.

How LLMs Can Help

LLMs enable human-like intelligent assistants, surely soon giving us the Siri we’ve always wanted. The technology is already here—see this beautiful example of an LLM-enabled assistant helping a blind man understand the world around him.

But note the friction in the user experience: why must he hold up a phone when he’s already wearing glasses?

Glasses like Meta Ray-Bans already have a camera, speakers, and a microphone and could replicate this functionality with better ergonomics. Smart glasses also capture a wider field of view than a handheld smartphone camera, which is especially helpful when the user can’t reliably aim a phone at the heart of the scene.

Alternative Form Factors

Smart glasses demonstrate an alternative device with a better user experience than smartphones for certain use cases.

The combination of glasses with a microphone and speaker is incredibly powerful, especially when the glasses can also overlay digitally rendered visual information onto the user’s field of view (i.e., augmented reality).

For instance, individuals with hearing loss who rely on video captions while streaming Netflix could have similar captions in everyday life! Augmented reality glasses with a multimodal LLM could both listen and read lips to provide better captions than traditional audio-only speech recognition.

As another example, smart glasses could give concertgoers their “I need to record this” fix without blocking the view of other audience members.

In addition to smart glasses, other form factors, such as headphones, watches, and speakers, will also improve with GenAI and continue to take over jobs that phones do today.

Qualcomm’s Strengths

We’ve made the case that Generative AI will boost smartphone inference, enhance hands-free device inference, and drive innovation in new form factors.

What do these consumer AI devices, from smartphones and glasses to laptops and automotive, need? They require power-efficient AI hardware, connectivity, robust input and output capabilities, and software.

Qualcomm’s core competencies are excellent for consumer edge inference devices.

Efficient AI Compute

Consumer devices' mobility necessitates batteries. Great devices, therefore, need high performance at low power to prolong battery life.

Qualcomm has a longstanding history of power efficiency in consumer electronics. Its Snapdragon platform is at the heart of smartphones, tablets, watches, headphones, glasses, VR headsets, and automotive systems, with laptops coming soon.

For generative AI, consumer devices need hardware to efficiently perform the matrix math at the heart of neural networks. This specialized hardware is called an NPU (Neural Processing Unit).

Qualcomm has a compelling NPU and a long history of evolving it within the broader SoC design to meet the needs of modern workloads. From a February 2024 whitepaper:

The NPU is built from the ground up specifically for accelerating AI inference at low power, and it has evolved along with the development of new AI use cases, models, and requirements. NPU design is heavily influenced by analyzing the overall SoC system design, memory access patterns, and bottlenecks in other processor architectures when running AI workloads. These AI workloads primarily consist of calculating neural network layers comprised of scalar, vector, and tensor math followed by a non-linear activation function.

The CPU and GPU are general-purpose processors. Designed for flexibility, they are very programmable and have 'day jobs' running the operating system, games, and other applications, which limits their available capacity for AI workloads at any point in time. The NPU is built specifically for AI - AI is its day job. It trades off some ease of programmability for peak performance, power efficiency, and area efficiency to run the large number of multiplications, additions, and other operations required in machine learning.
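To make the whitepaper’s description of a layer (tensor math followed by a non-linear activation) concrete, here is a minimal sketch of a single fully connected layer in plain Python. It is generic textbook math, not a description of how the Hexagon NPU executes it; the toy weights are hypothetical, and ReLU is assumed as the activation function.

```python
# Minimal sketch of one fully connected neural-network layer:
# tensor math (matrix-vector multiply plus a bias add) followed by a
# non-linear activation (ReLU, assumed here). Generic math, not Qualcomm's
# NPU implementation; the weights below are hypothetical toy values.

def matvec(weights: list[list[float]], x: list[float]) -> list[float]:
    """Multiply a (rows x cols) weight matrix by a length-cols vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def relu(v: list[float]) -> list[float]:
    """Non-linear activation: clamp negative values to zero."""
    return [max(0.0, vi) for vi in v]

def dense_layer(weights: list[list[float]], bias: list[float], x: list[float]) -> list[float]:
    # Tensor math (matrix multiply), then a vector add of the bias...
    pre_activation = [a + b for a, b in zip(matvec(weights, x), bias)]
    # ...then the non-linear activation function
    return relu(pre_activation)

def count_macs(weights: list[list[float]]) -> int:
    """Each weight contributes one multiply-accumulate (MAC) per input vector."""
    return sum(len(row) for row in weights)

W = [[1.0, -2.0, 0.5],
     [0.0, 1.0, 1.0]]
b = [0.5, -6.0]
x = [2.0, 1.0, 4.0]

print(dense_layer(W, b, x))
print(count_macs(W))
```

For this toy layer, the first output is 2.5 and the second is clamped from -1.0 to 0.0 by ReLU, using 6 MACs in total. As a rough rule of thumb, a decoder-style LLM performs about one MAC per parameter for each generated token, so a model with billions of parameters needs billions of MACs per token: exactly the repetitive multiply-and-add work an NPU trades programmability away to run efficiently.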

Input & Output

Permutations

Human input drives today’s edge computing—voice calls, text messages, video chats, emails, and so on. As such, these devices need sensors and signal processing capabilities to efficiently handle these visual (display), auditory (sound), and tactile (touch) workloads. Likewise, the human consumption of received data requires the same modalities.

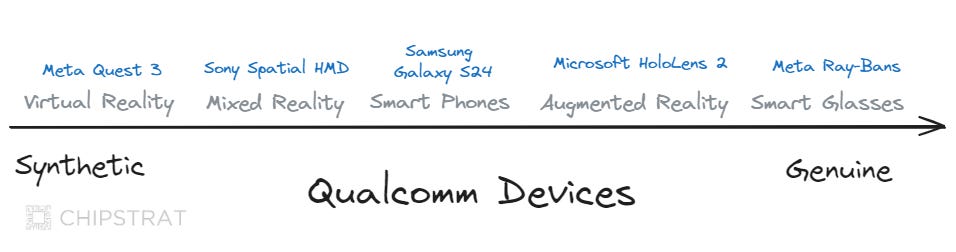

Note that modern and future edge computing form factors are merely I/O permutations that cater to different modalities and physical characteristics.

Smart glasses like Meta Ray-Bans prioritize sound over screens, using voice as the primary input and audio as the primary output for a more natural interaction. They also offer visual and tactile input through a camera, a button, and a touchpad, making them ideal for hands-free use.

By analyzing form factors based on how they prioritize input and output modalities, we can better understand their suitability for various use cases. For instance, face-mounted devices like VR headsets and smart glasses offer distinct visual experiences. The Meta Quest 3 immerses users in a fully synthetic world, while the Apple Vision Pro's "passthrough" feature merges real and virtual environments. Although their screens are fully synthetic, smartphones only partially obstruct the user's field of view, giving users a mixed reality (which can lead to dangerous situations like distracted driving).

Multimodal LLMs enhance these alternative experiences by natively understanding various types of information, including audio and video, resulting in a more satisfying user experience. They also enable innovative interactions with reality, from the captioning described above to asking questions about the real world, like "What is that thing called in Spanish?" (while pointing) or "Can I park here?"

It's interesting to note that the data generated and consumed at the edge doesn't have to come from humans. Since LLMs can generate and understand natural language, an LLM "agent" could sit on the sending or receiving side of a conversation with an edge device.

Google has been doing this for a while, as documented in this NYT article Google’s Duplex Uses A.I. to Mimic Humans (Sometimes). Modern transformer-based multimodal LLMs should improve human-agent and agent-agent interactions over previous attempts with older machine-learning algorithms.

Qualcomm’s I/O

Qualcomm has experience supporting various edge computing form factors, including smartphones, smart glasses, AR, VR, wireless earbuds, watches, and automotive infotainment systems.

For example, every device in the previous graphic could be swapped for a Qualcomm-powered one.

We can also glimpse Qualcomm’s low-power I/O hardware expertise with existing innovations like the Sensing Hub, a dedicated, low-power AI processor designed for use cases like noise cancellation.

Connectivity

Edge devices require connectivity to support a wide range of user needs. While there are legitimate offline use cases, such as on an airplane, they are the exception rather than the norm. Devices that stay in the home, like smart speakers, need only WiFi. Devices used outside the home need either their own LTE connection or a Bluetooth or WiFi link to an LTE-connected device (like glasses paired with a smartphone).

Enter Qualcomm with its legacy of low-power, high-performance communication chips.

For those who don’t know about Qualcomm’s telecommunications history, I highly recommend this Acquired podcast: The Complete History & Strategy of Qualcomm

Company Focus

AI hardware is useless without AI software. Therefore, AI hardware companies must heavily invest in AI software, essentially becoming full-stack AI companies. This requires vision and commitment to AI from the C-Suite.

Does Qualcomm leadership understand this?

I would say so. During the recent earnings call, CEO Cristiano Amon discussed the importance of (and implicit investment in) AI. From the prepared remarks:

Cristiano Renno Amon - QUALCOMM Incorporated - President and Chief Executive Officer

Thank you, Mauricio, and good afternoon, everyone. Thanks for joining us today. In fiscal Q2, we delivered non-GAAP revenues of $9.4 billion.

… Let me share some key highlights from the business.

As we drive intelligent computing everywhere, we're enabling the ecosystem to develop and commercialize on-device GenAI applications across smartphones, next-generation PCs, XR devices, vehicles, industrial edge, robotics, networking and more.

To that end, we recently launched the Qualcomm AI Hub, a gateway for developers to enable at scale commercialization of on-device AI applications.

It features a library of approximately 100 pre-optimized AI models for devices powered by Snapdragon and Qualcomm platforms, delivering 4x faster inferencing versus nonoptimized models. As AI expands rapidly from the cloud to devices, we are extremely well positioned to capitalize on this growth opportunity given our leadership position at the edge across technologies, including on-device AI.

And again, later in the Q&A:

Christopher Caso - Wolfe Research, LLC - MD

Got it. That's helpful. As a follow-up, with regard to the AI handsets, a lot of the questions that we receive on this is, sort of why? And what are the applications, what are the reasons for a consumer to upgrade their handsets to AI. Cristiano, you talked about the developer hub that you're running, perhaps that gives you some insight into what the developers are doing, what's going on in the pipeline that will drive these AI handset sales?

Cristiano Renno Amon - QUALCOMM Incorporated - President and Chief Executive Officer

Look, it's a great question. And I want to step back and say, in general, I think AI is going to benefit all the devices. I think AI when extends to running on device besides the benefit of working alongside the cloud. They have completely new use cases, privacy, security, latency, costs, personalization, et cetera. Here's how you should look into this. In the same way that when the smartphone started, you have a handful of apps that eventually grew to thousands and hundreds of thousands of apps, and that was really the user experience.

I think we're in the very early stage. And you're starting to see some of those use cases and you have exactly that moment. Some of the phones have 10 apps and they're growing. And one of the reasons we have the AI hub because this is a new, I think, moment for the industry. It works a little bit different. It's different than how you think about the traditional app store.

You have many models, many in the open source models, They can run on a device and they can be attached or built into any application. So I think we're starting to see is a lot of developer interest. As we said in the prepared remarks, we have over 100 different models, from -- models from OpenAI to Llama 3 and many, they're quantize and optimized to the NPU when they are optimized using the tool, they run 4x faster than the model without optimization.

And those models and those use cases started to be implemented in apps, whether it's an image or associated with a camera, it's associated with language. So we're in the beginning of that transition. We really like what we see because it's really creating a reason for people to buy a new device, it's going to be the same on PC. It's going to be the same as they come to cars as well and in industrial. So it's an exciting tailwind, I think, for our strategy of actually driving computing and connectivity at the edge.
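Amon mentions that AI Hub models are quantized and optimized for the NPU. Below is a minimal sketch of one common form of quantization, symmetric per-tensor int8, assuming weights held in a plain Python list. It is a generic illustration, not the AI Hub toolchain, and it makes no claim about where the quoted 4x speedup comes from. Storing a weight as an 8-bit integer takes a quarter of the memory of a 32-bit float, and integer arithmetic is generally cheaper in hardware, which is one common reason quantized models run faster on NPUs.

```python
# Minimal sketch of symmetric, per-tensor int8 quantization (a generic
# technique; not Qualcomm AI Hub's actual pipeline). One float "scale" is
# shared by the whole tensor, and each weight becomes an integer in
# [-127, 127], so it fits in 1 byte instead of 4 for a float32.

def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to int8 values using one scale derived from the largest magnitude."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, which would otherwise divide by zero
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the symmetric int8 range
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the int8 values."""
    return [qi * scale for qi in q]

weights = [0.5, -1.27, 0.02, 1.0]  # hypothetical toy weights
q, scale = quantize_int8(weights)
approx = dequantize(q, scale)

# Rounding to the nearest step bounds each weight's error by half a step
max_err = max(abs(a - w) for a, w in zip(approx, weights))
print(q, scale, max_err)
```

For these toy weights, the shared scale is about 0.01 and the stored integers are [50, -127, 2, 100]. The design trade-off is visible in `max_err`: each weight loses at most half a quantization step of precision in exchange for a 4x smaller footprint.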

Moreover, I watched this Snapdragon Summit Tech Talk and was impressed by how focused Qualcomm’s product management and engineering leadership are on edge AI, from optimizing their hardware for transformer-based neural networks to investing significantly in on-device AI software.

I also see this AI focus across the company, from R&D active in the TinyML community to product marketing whitepapers and polished YouTube marketing.

From their whitepapers, we see this full-stack approach:

What differentiates our NPU is our system approach, custom design, and fast innovation. In our system approach, we consider the architecture of each processor, the SoC system architecture, and the software infrastructure to deliver the best solution for AI. Making the right tradeoffs and decisions about hardware to add or modify requires identifying the bottlenecks of today and tomorrow. Through full-stack AI research and optimization across the application, neural network model, algorithms, software, and hardware, we find the bottlenecks. Since we custom-design the NPU and control the instruction set architecture (ISA), our architects can quickly evolve and extend the design to address bottlenecks.

This iterative improvement and feedback cycle enables continual and quick enhancements of not only our NPU but also the AI stack based on the latest neural network architectures.

Through our own AI research as well as working with the broader AI community, we are also in sync with where AI models are going. Our unique ability to do fundamental AI research supporting full-stack on-device AI development enables fast time-to-market and optimized NPU implementations around key applications, such as on-device generative AI.

And finally, Qualcomm either has had a longstanding vision for an AI future or is great at framing its history in this light (or both!).

From their annual report:

We conduct broad, leading research and development across AI, including generative AI, from fundamental research to platform and applied research, with the goal of advancing its core capabilities (i.e., perception, reasoning and action), and scaling them across industries and use cases. With investments made in AI for over a decade, our research is diverse, and we are focused on power efficiency and personalization to make AI seamless across our everyday experiences.


Bear Case and Timing

The primary argument against a rise in hands-free inference (e.g., on glasses) is that everyone already has a smartphone in their pocket. Although the smartphone user experience is objectively worse for a variety of use cases, today’s consumers already have habits that will be hard to overcome. Additionally, cellular connectivity drains batteries, so many hands-free devices will rely on a phone in your pocket for their data connection until batteries improve.

Even if a smartphone has a poor or unsafe user experience, it could be difficult to persuade most consumers to buy glasses without some additional factor to motivate them, such as a viral app or a major holiday marketing campaign.

Is it possible that more people will start using smart glasses, even if the initial adoption is slow? Drawing inspiration from countries that skipped landlines and went straight to mobile phones due to their superior user experience, let's consider who doesn't have cell phone habits and would be willing to transition directly to smart glasses.

Hint: it's not based on geography, but rather on demographics.

Children!

I’m 100% serious when I say I’d strongly consider buying my kids barebones 5G-enabled glasses before a smartphone. Such a device would ensure they could communicate with me should the need arise, yet without the distraction of screens (and access to inappropriate content). A smartwatch fits the bill too. But glasses with augmented reality could let them play Pokemon Go too 😎

Where’s the Consumer GenAI?

It’s fair to push back and ask why we haven’t seen much consumer GenAI yet. After all, GenAI already drives productivity in the workplace. However, consumer device usage is broadly motivated by leisure—YouTube, TikTok, Pokemon Go, etc.

Why aren’t we seeing GenAI transform these consumer apps yet? There’s definitely an argument that people don’t know what to do with ChatGPT’s empty textbox.

Here’s another interesting headwind: developer economics.

Consumer demand for leisure apps is highly price-elastic. As such, these apps often use what I call “lowest common denominator” pricing (e.g., $1.99 on the App Store), or they simply give the app away for free and generate revenue via advertising.

With these revenue models, it’s hard for consumer app developers to justify the current cost of inference. Inference-as-a-service lowers the cost for devs to experiment: just pay for what you use. We see this with the explosion of projects built atop Groq, which offers fast and low-cost inference.

However, edge inference on a Qualcomm Hexagon NPU or the Apple Neural Engine reduces the developer’s cost of inference to zero: the customer pays for both the CapEx (buying the device) and the OpEx (charging it).

The transition from purchasing racks of Nvidia H100s to using inference-as-a-service APIs to leveraging edge NPUs mirrors the shift from buying on-premise servers to renting AWS compute to developing iPhone apps. The cost model moves from significant upfront expenses to pay-as-you-go variable costs to “free” for entirely client-side applications.
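The three cost models can be compared with simple arithmetic. Every figure in this sketch (hardware price, monthly hosting cost, token volume, per-token price) is a hypothetical assumption chosen for illustration, not real pricing from Nvidia, Groq, AWS, Apple, or Qualcomm.

```python
# Hypothetical comparison of a developer's yearly inference bill under the
# three cost models: own the hardware, rent inference-as-a-service, or run
# on the customer's device. All numbers are illustrative assumptions.

def upfront_cost(hardware_capex: float, monthly_opex: float, months: int) -> float:
    # Own the servers: pay CapEx on day one plus OpEx (power, hosting) every month
    return hardware_capex + monthly_opex * months

def pay_as_you_go_cost(tokens_per_month: float, price_per_million_tokens: float, months: int) -> float:
    # Inference-as-a-service: pay only for the tokens actually served
    return tokens_per_month / 1_000_000 * price_per_million_tokens * months

def edge_cost(tokens_per_month: float, months: int) -> float:
    # On-device inference: the customer's hardware does the work, so the
    # developer's cost is zero regardless of usage (parameters kept only
    # so all three functions take comparable inputs)
    return 0.0

MONTHS = 12
TOKENS_PER_MONTH = 500_000_000  # hypothetical app-wide usage

owned = upfront_cost(hardware_capex=250_000, monthly_opex=5_000, months=MONTHS)
rented = pay_as_you_go_cost(TOKENS_PER_MONTH, price_per_million_tokens=0.50, months=MONTHS)
on_device = edge_cost(TOKENS_PER_MONTH, MONTHS)
print(owned, rented, on_device)
```

With these made-up numbers, owning hardware costs $310,000 for the year, renting inference costs $3,000, and edge inference costs the developer nothing. The pay-as-you-go bill also grows linearly with usage, so a free, ad-supported app that goes viral sees its costs climb with its popularity, while the edge bill stays at zero.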

As generative AI models become increasingly capable and accessible, we will see an explosion of creative experimentation and the birth of new companies. Innovation will no longer be limited to those with substantial financial resources, opening up entrepreneurial opportunities for individuals from all walks of life.

QCT: Edge AI Pivot?

For those new to Qualcomm, it operates two primary business units: QCT, which designs and sells wireless chips, and QTL, which licenses Qualcomm's extensive patent portfolio to manufacturers. QCT operates a fabless model, outsourcing chip manufacturing, and focuses on products for smartphones, tablets, laptops, vehicles, and other connected devices.

Given Qualcomm’s history, QCT has always been viewed as a wireless chip company.

An interesting question is whether QCT should reposition itself as an edge AI company with differentiating networking technology.

After all, notice the similarities with cloud AI company Nvidia: Nvidia is framed as an “accelerated computing” company. Its cloud offering (DGX server) has an AI software ecosystem atop AI hardware and differentiating networking technology (InfiniBand and NVLink).

Likewise, Qualcomm’s edge offering (Snapdragon) has an AI software ecosystem atop AI hardware and differentiating networking technology.

In a world where investors value AI companies, maybe Qualcomm’s product marketing should focus on QCT as “edge AI first.”

My interpretation of Qualcomm’s current marketing is that QCT is already trying to position itself as both a networking and edge computing powerhouse — but maybe AI computing should lead in the years to come.

The Impact on QTL

A rise in consumer device inference will increase the number of wireless devices. Again, the main benefit of smartphones and hands-free devices is mobility. Yes, these devices will try to run as much local inference as possible, but edge models will always be less powerful than cloud models. This doesn’t mean edge models aren’t useful; on the contrary, “good enough” local models will have secure access to contextual information like GPS and biometrics and be a better fit for certain use cases than the cloud. But the most powerful models will always live in the cloud, ensuring form factors like smartphones and hands-free devices will always need WiFi and cellular chips in them. This is a boon for both QCT and QTL.

Apple: Friend or Foe?

Apple’s NPU will drive developers' cost of inference to zero, enabling experimentation on iOS apps. Given Apple's premium customers and their willingness to pay for apps, Apple’s App Store will be the best distribution channel for these LLM-based innovations.

If those popular LLM apps are only available on Apple devices, we might see a rise in demand for Apple's latest iPhones, as they are likely to have more memory for AI and more powerful Neural Engines. Yet Qualcomm, whose modem chips are still used in Apple devices, would also benefit from this trend.

This innovation would eventually result in demand for premium Android devices with powerful NPUs to run Android versions or clones – enter Snapdragon with Hexagon.

In summary, GenAI innovations on Apple should benefit both QTL and QCT.

Friend, not foe. For now. Maybe: frenemy.

Arm

Finally, it’s self-evident yet worth pointing out that Arm’s CPUs are used in Snapdragon SoCs. Thus, Arm also benefits from a rise in Snapdragon-based inference. Additionally, Arm is at the heart of Apple Silicon, so Arm wins when consumer inference increases — regardless of iOS, Android, or both!

Concluding Remarks

AMD's AI portfolio lacks hardware for consumer devices, missing the opportunity in the growing market of mobile and hands-free inference. While laptops are a part of the consumer market, smartphones, smart glasses, headphones, and smart speakers are becoming increasingly important for AI inference.

Qualcomm, with its power-efficient AI hardware, connectivity, robust I/O capabilities, and software expertise, is well-positioned to benefit from consumer GenAI, unlike AMD. This is due to Qualcomm's strength in low-power, high-performance communication chips, efficient AI compute with NPUs, experience supporting various edge computing form factors, and a clear focus on AI from leadership.

However, challenges remain for consumer GenAI adoption, such as deeply ingrained smartphone habits and the high cost of cloud inference for consumer app developers. The future of consumer AI devices lies in the balance between smartphone convenience and hands-free devices' potential, with Qualcomm likely playing a significant role.